Abstract

As the signature pedagogy of social work education, field education is a critical and complicated aspect of program development. Effectively managing this complex process is a priority and requires a significant amount of administrative activity to maintain compliance and manage experiences for all stakeholders. While countless field placement software platforms are available to streamline processes and improve efficiencies, little guidance is available to support programs to strategically evaluate, select, and implement a software platform. In this article, the authors provide a model for vetting field placement software using a case study. The article concludes with implications for other universities considering adopting software to manage placements within their social work field education departments.

Keywords: social work; field education; placement software; technology; case study

Introduction

In social work education, field education is a mandatory requirement where students weave knowledge, skills, and values learned from didactic courses into their practice with clients and communities in agency-based, supervised settings (Council on Social Work Education [CSWE], 2015). On top of all the relational work and supervision needed to prepare students for this endeavor, social work programs devote a significant amount of administrative activity to manage the student educational experience, the community partner experience, faculty gatekeeping responsibilities, and programmatic requirements. This process is further complicated by the growth of online social work programs, requiring field educators to manage field education from a distance (CSWE, 2018; Hitchcock, Sage, & Smyth, 2019). While these challenges are not new to social work education, the way field educators are addressing these challenges is evolving, in part due to the rise in digital technologies. Assessment and field placement software programs are readily available to support communication. Field placement software is a type of computer program, often web-based, that provides streamlined access for students, field instructors, and administrators to gather and store information, submit documentation, and obtain reportable data, among other functionality. In this article, the authors provide a model for vetting field placement software using a case study.

Why Field Placement Software?

Social work field departments manage a series of competing demands throughout the practicum experience, which requires coordination between many stakeholders (Buck, Bradley, Robb, & Kirzner, 2012; Buck, Fletcher, & Bradley, 2016; Hunter, Moen, & Raskin, 2016; Wertheimer & Sodhi, 2014). The primary job of the field education office is to facilitate the experiential learning requirements of students and to manage the signature pedagogy that makes social work stand apart from other social science degrees. Field offices must also manage all the administrative tasks associated with assessing student availability for placement; managing student preferred placement requests; monitoring student professional behaviors outside the campus setting; recruiting and retaining competent field supervisors and qualified agencies; and attending to accreditation and programmatic needs. Directors and instructors of field education must orchestrate timelines and tracking related to student eligibility, agency availability, and confirmation of required clearances and orientation/training. Many institutions develop a set of standard operating procedures or protocols to effectively pass students through each phase while communicating progress with each stakeholder. Documentation is extensive, and typically includes student applications to enter field, affiliation agreements with agencies, learning contracts, logs of student hours in field, and records of student competencies for accreditation reporting. Field education faculty are also responsible for surveying agencies, faculty, and students to maintain standards and for continuous improvement efforts. All this work is needed to support the field education experience, separate and apart from the curricular component and active learning that occurs in the field.

Historically, these processes were often managed via paper or by using different computing platforms such as spreadsheets and word processing software, as well as phone or email communications. Field programs set up large, digital databases with essential information about students, field instructors, and agencies. However, these processes become difficult to manage over time, and lack integration with other institutional software systems. Additionally, standards issued by the Council on Social Work Education (CSWE) and the National Association of Social Workers (NASW), as well as department choices about which outcomes to assess for accreditation, require tracking additional categories of information (CSWE, 2015; National Association of Social Workers [NASW], 2017a). For example, in order to meet CSWE’s accreditation standards, field education must be “systematically designed, supervised, coordinated, and evaluated” (CSWE, 2015, p. 12), and measure progress toward the program’s social work competencies. These requirements led many field offices to increasingly invest in commercial data management systems starting in 2008, when CSWE first required social work programs to report their assessment data to the public, as well as demonstrate how they use the data to improve and innovate student learning and curricula (CSWE, 2008, 2015; Hitchcock et al., 2019). Similarly, the NASW (2017b) Standards for Technology in Social Work Practice require that field educators ensure digital records are secure, and that all stakeholders are informed about the security of field-related data, which requires knowledge and implementation of increasingly complex security features. More and more, field offices must make complex decisions about how to integrate field placement platforms into their programs.

Considerations for Selecting Field Placement Software

There is little guidance from the social work accreditation body or in the literature about how to choose field placement software that helps manage the demands of field education. Specific guidance is also difficult to offer because software choices depend on the characteristics of the social work program, such as regional or geographic factors (e.g., whether all state schools cooperate to share a software license), the number of stakeholders to organize (e.g., field faculty and liaisons, site supervisors), and the program size. Field educators have two primary options for field placement software: creating an in-house program designed in partnership with an information technology (IT) department or purchasing an already developed software package. Because in-house options require development costs (e.g., time) and ongoing technical support from within the institution (e.g., software updates), many programs find it easier to contract with a software vendor and purchase a field placement software platform (Hitchcock et al., 2019).

Field placement software platforms are often web-based, and provide access for students, field instructors, and administrators that enable them to gather and store information, submit documentation, and pull reportable data. Countless options and tools within these platforms help manage the placement process. The decision to utilize one of these software programs can be daunting, depending on the size, budget, and the available resources of each social work program. Table 1, accurate at the time of this writing, offers a non-exhaustive list of examples of these platforms. These software offerings are constantly changing due to the nature of the rapidly emerging technological environment and are sometimes designed primarily for majors outside of social work.

Table 1: List of Field Placement Software Vendors that Can Be Considered for Social Work Field Education

| Name of Software | Website | Name of Company |
| --- | --- | --- |
| Chalk & Wire | campuslabs.com/chalk-and-wire | CampusLabs |
| EMedley | emedley.com | AllofE |
| E*Value | medhub.com/evalue/evalue-product | MedHub |
| Exxat | exxat.com | Exxat |
| foliotek | foliotek.com | Foliotek, Inc. |
| G Suite (Google Productivity Suite) | gsuite.google.com | |
| InPlace | inplacesoftware.com | QuantumIT |
| Intern Placement Tracking (IPT) | alceasoftware.com/web/login.php | Alcea Software |
| Sonia | sonia.com.au | Planet Software |
| Tevera | tevera.com | Procentive |
| Time2Track | time2track.com | Liaison |
| Tk20/TaskStream/Livetext – Watermark | watermarkinsights.com | Watermark |
| Typhon | typhongroup.com | Typhon Group |

When selecting a field placement software, practical considerations for field education include the number of students and types of tracking required, as well as the features available within the software itself. Table 2 offers a list of commonly available features of field placement software. Additionally, field programs must consider the resource costs associated with any field placement software, such as financial cost of the software for students and/or the institution, time required by faculty and staff to utilize a new system, and training requirements for all stakeholders, especially students and field instructors.

Table 2: Sample of Digital Features and Tools From Field Placement Software

- Data storage and retrieval

- Document tracking

- Filtering and matching student and agency attributes

- Assessment of learning outcomes

- Off-site (non-university) log-in

- Interoperability with other software or databases

- Electronic forms

- Surveys

- Bulk emailing and email merging options

- Automatically generated emails for placement interviews

- Data and outcome reporting

- Dashboards

- Import and export features

- Reports

- Compatibility with other common software programs in higher education such as learning management systems

Adapted with permission from Hitchcock et al. (2019)

The purpose of this article is to present a case study about how the first author, a field director, vetted and selected field placement software for her Department of Social Work. A case study methodology was chosen due to the nature of the research question: How do social work field educators effectively evaluate, select, and implement a software platform to manage field education? According to Schramm (1971), a case study “tries to illuminate a decision or set of decisions: why they were taken, how they were implemented, and with what result.” Case study research is relevant when the research question(s) seek to explain a complex social phenomenon in-depth and within a real-world context (Yin, 2018). Due to the lack of research available in this area, the authors chose a descriptive case study framework to describe the complexity of the processes and identify potential strategies to solve the issue. One significant strength of using case study design is that it allows processes to be tracked over time, in this case many months of research (Yin, 2018).

In striving for external validity, the case study design is intended to document the decision-making processes and develop generalizable lessons for other programs exploring field placement software.

This case study describes the process of choosing field placement software for the field education component of a new Bachelor of Science in Social Work (BSSW) program. The university setting is a large, private online university with plans to admit students nationally. Along with providing details about the process of selecting the needed software, the case study informs a series of recommendations for other social work programs who may be considering adopting vendor-supported databases, including a field technology assessment checklist.

Case Study: A Field Director’s Experience of Choosing Field Placement Software

As a field educator with experience across several institutions both small and large, I knew that selecting the right field software was a foundational component of developing the program. I found that little guidance is offered through field education listservs or literature to support the vetting process of systematically selecting a platform. There are countless vendors who attend social work educational conferences and request time to provide demonstrations of their platforms. It was tempting to accept these invitations to see what they have to offer, and easy to become lulled by the attractive features presented and promises made during the sales pitch. Following one of these demonstrations, I realized that certain features or digital tools offered by vendors were essential to my field education program, but I was unsure how to choose the best platform. I also knew I needed to consider the needs of the internal university stakeholders, who needed access to specific field education data for reporting and managing workflows.

Prior to scheduling demonstrations with vendors, I realized the need to determine what gaps existed in the current placement processes. I began to create a list of the current tools and in-house resources used to manage internships across the university to see if there were ways to maximize those resources to better meet our program’s needs. This list of existing tools also helped organize all the institutional software programs we would need to integrate when the final vendor was chosen. For instance, our curricular dashboards alert us when students become eligible for field education based on prerequisites, and we needed to know how this system would interface with a new field placement software.

I wanted to develop a clear picture of what was ineffective or inefficient in our current internship tracking processes in other disciplines (Counseling, Human Services, Education) from multiple perspectives: student, agency, faculty, and administrative. I leaned on our IT department to inform me of the technical assistance calls made by these stakeholders who encountered issues when logging field hours, submitting evaluations, and completing other field-related tasks. While meeting with the field placement team, we discussed students’ most frequently asked questions, and the challenges students encountered with field technology. Of the themes raised as frequent challenges for students, the most common was completing required paperwork across multiple platforms that were technically complex. I identified several new areas of need, such as easier access to reportable data, and limiting exposure to regulatory risks.

I began to develop a list of needs based on my institution’s resources and the needs of our field office. I considered the following factors: cost, functionality, accessibility, legal, regulatory, data and reporting needs, training, tech support, and integration with other university systems including our Learning Management System (LMS), Blackboard Ultra. See Table 3 for the checklist of all these considerations. As I identified these needs, I had to determine whether they were significant enough needs to justify costs associated with addressing the factors. My leadership team wanted me to demonstrate the costs and benefits of the new vendor contract, so it was important for me to document the concerns with the current processes. Knowing that the process to manage this change would be time-intensive to implement, I needed to ensure that the needs were great enough to substantiate the changes.

Next, I communicated with all relevant university stakeholders. This included the university IT team, the accessibility services office, legal and regulatory leaders, and faculty and field staff. These stakeholders offered additional feedback on the gaps within the current process, features that may be helpful, and other systems that should be integrated if a new vendor is selected. As an internal validity measure, this phase was considered “pattern matching,” identifying the most critical features in a platform for all stakeholders, prior to any demonstrations (Yin, 2018). Our Bachelor of Science in Social Work program had not yet launched, or we would have also surveyed students and/or site supervisors for their feedback or invited them to a demonstration with our top vendor selections.

In interfacing with institutional stakeholders, I noted some unexpected challenges. I experienced a language barrier with legal services, where I had little expertise. Although legal services reviewed contracts for coverage and consistency at the university level, I was responsible for agreeing to some business terms and conditions on behalf of the college. My level of comfort was tested while reviewing and interpreting these contracts. For example, an agreement may state that “reasonable” efforts will be taken to maintain services according to industry practices. I needed to learn quickly how to negotiate which event and response definitions both parties would accept. It was also important to lean on legal expertise to ensure the contract met Family Educational Rights and Privacy Act (FERPA) regulations, which protect students’ privacy with regard to their records and information. I became aware that all vendors within the university are held to the same standards and strict FERPA guidelines of the university (Rainsberger, 2018). This learning curve added time to the negotiation process.

I had a similar experience in working with the accessibility services team, where specialized language also stretched my existing knowledge. Although I was committed to equity for our online students, I was not fully aware of how equity was represented in digital technology. I learned that there are many levels of accessibility that vary greatly depending on a university’s standards and student populations. As a primarily online institution, my university has set high standards for Web Content Accessibility Guidelines (Web Accessibility Initiative, 2018), which are designed to remove barriers for learning in online spaces. I needed to become knowledgeable about Web Content Accessibility Guidelines and my university’s level of accessibility requirements before speaking with vendors.

Few affordable placement platforms existed that addressed all our preferred needs. I learned that some institutions manage placements through home-grown efforts (i.e., they design their own software, often in partnership with their IT staff) or use low-cost proprietary software, such as Google Suite, which is free to use and supports collaboration and data collection through survey-type forms. We considered these options, as well as repurposing institutional resources already used across the school, but this would have required significant human resources from my university’s system, which were not available. Without financial support, considering a new platform would not have been worth the time investment, so I talked to our administration team about the financial resources that might be needed. For several reasons, including scalability as we were launching online and across state borders with multiple regulatory complications to manage, I decided to go with a vendor-supported platform, and not develop a system in-house.

I reviewed a broad spectrum of proprietary platforms that offer varying functionality at many price points. On the low-cost end of the spectrum, software programs such as Intern Placement Tracking (IPT) allow a field office to build a website for form submission (e.g., students and field supervisors complete learning agreements and evaluation forms online, which the field office can download as a spreadsheet) and to manage basic logistics. Larger platforms like Salesforce allow advanced features such as tracking geographic locations of placements and can also generate reminders and alerts for specific student outreach.

I did not limit myself to vendors specifically advertising to social work educational programs; I considered multi-disciplinary vendors and those who manage multiple aspects of a program including assessment and curriculum management. For example, I reviewed the platform EMedley, which is primarily marketed for health science programs but offers a varied suite of programmatic management options that can be individually tailored. I did not identify a one-size-fits-all platform that I thought would be a perfect fit for every type of field department.

Because my university has multiple departments placing students in internships (teacher education, nursing, counseling, etc.), I connected with those programs to develop a list of institutional requirements and elements that are required across their programs. By combining efforts and selecting a platform together, we could potentially combine our student enrollments and cost-share across departmental budgets. This required careful exploration to determine priorities and to translate functions that were similar across disciplines. Typhon and E*Value were considered, for example. Although they are used primarily by health care professions, they could meet social work placement needs with some modifications. For instance, software may use discipline-specific language, such as “student teacher,” “proctor,” or “preceptor,” and vendors may be able to customize these options for a fee. However, since these platforms serve a wider market, they often provide greater functionality and are sometimes less costly. Multiple colleagues at my university reviewed and contributed to the checklist of recommended criteria along with questions to ask of stakeholders and vendors, which is provided in Table 3. While not exhaustive, this list of criteria and questions covered the main considerations I wanted to weigh in finding the best fit of software for our program.

Table 3: Recommended Criteria With Questions for Selecting Field Placement Software

| Criteria | Questions |
| --- | --- |
| Costs | • Pricing structure: Based on full-time enrollments? Flat rate fee per user or per student? Annual licenses or lifetime access per license? Are there fees for set-up, one-time, monthly? Training costs? • Any hidden fees for editing forms/reports? |
| Functionality | Does it offer your basic requirements? • Time tracking • Customization for evaluations • Site location database • Field instructor database • Surveying features |
| Access | • Available to multiple stakeholders: Students Administrators Faculty Liaisons Community partners • Can information be shared/restricted based on their assigned roles? • Any firewall/access issues experienced? |
| Legal | Consult your in-house legal team on the following: • Are e-signatures acceptable at your university and compliant with state requirements? • Does it meet FERPA regulations? • Is document retention and storage approved within this proposed platform? • Will there be a storage limit (of size or time) in place by the vendor? |
| Regulations | • Do you need to monitor and maintain state/local/program-specific regulations? • Can it help you meet accreditation needs? • Do you need to track student or agency-specific documents like clearances, immunizations, health screenings, etc.? • How long will the data be maintained in the platform? Lifetime student access to evaluations is preferred due to licensure and state regulations. |
| Accessibility Compliance | Consult your disability services office on the following: • Is the software accessible for all learners according to your university’s standards? • Can it be used with screen-readers or other assistive technology to comply with your accessibility standards? Request a demo account for your accessibility team to test compliance. |
| Data | • Consult your IT dept. to review information security standards. • Is it easy to generate reports for purposes such as program reviews, reaffirmation, and continuous quality improvement? • Who retains ownership of the data? Who else has access to the data? • Where is the data stored? Remotely or in cloud storage? Will this be an expense for the university or on the vendor’s servers? |
| Training | • Will the vendor provide training? Is this included in implementation costs or will this be additional? • Do they have user guides/videos available for each stakeholder to problem solve (students, field instructors, faculty, administrators, etc.)? |
| Technical Support | • Does the vendor have an online help desk or customer support? Are these hours of operation consistent with your time zone and user needs? • Can they provide feedback on client responsiveness? • How quickly are changes implemented? As-needed, quarterly, or based on level of severity? |
| Integration | • Are there other platforms you need this vendor to integrate with? • For example, your institution’s learning management system or other administrative programs like enrollment? |

Adapted with permission from Samuels (2018)

Sifting through complex pricing structures of vendors was difficult, but necessary, to determine the true cost of each product. I learned that most platforms base their contractual fee on student full-time enrollment (FTE), that is, on the number of enrolled students. Some also charge for customization, annual fees, or for on-site training for administrators and faculty. Some companies, like Sonia, charge based on a tiered structure that costs less as student enrollment climbs. Other vendors, like Tevera, charge a flat per-account rate no matter how many students use the platform; at $195 per account this seemed particularly high, but the cost was quite competitive once the aforementioned factors were considered. Some platforms charge per user, not per student. In the social work placement process, every student has several people associated with their field record, such as a field instructor (potentially an additional task instructor), a field liaison, and an administrator (coordinator, director, faculty leader, or all); thus, per-user fees can add up quickly.
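The difference between per-student and per-user pricing can be illustrated with a short calculation. The following is a minimal sketch, not a vendor quote: the cohort size, the per-user fee, and the count of four accounts per student record are hypothetical assumptions, and only the $195 figure comes from the case study. Because liaisons and administrators usually support many students, four accounts per student is best read as an upper bound.

```python
# Illustrative comparison of per-student and per-user pricing.
# All figures except the $195 fee named in the case study are hypothetical.


def per_student_total(students: int, fee_per_student: float) -> float:
    """Total cost when the vendor bills one license per enrolled student."""
    return students * fee_per_student


def per_user_total(students: int, accounts_per_student: int, fee_per_user: float) -> float:
    """Total cost when every account tied to a student's field record is billed."""
    return students * accounts_per_student * fee_per_user


students = 100            # hypothetical cohort size
accounts_per_student = 4  # assumption: student, field instructor, liaison, administrator
fee_per_user = 60.0       # hypothetical per-user fee

per_student = per_student_total(students, 195.0)
per_user = per_user_total(students, accounts_per_student, fee_per_user)

print(f"Per-student pricing: ${per_student:,.2f}")
print(f"Per-user pricing:    ${per_user:,.2f}")
```

Under these assumptions, a per-user fee that looks far lower than $195 still produces the larger total, which is why it helps to ask each vendor exactly who counts as a billable user.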

After completing the internal needs assessment, I was better prepared to review demonstrations and compare vendors using similar criteria. Any features vendors presented could be objectively considered based on our checklist. If a novel feature that did not appear on our checklist was able to streamline processes or significantly improve efficiency in some way, it was reflected in our notes and discussed as a team. Researching dozens of field placement software platforms was daunting, as vendors offered different features. The checklist helped me narrow the list of vendors based on the types of products I was seeking and shaped the questions I had during each demonstration.
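One way to apply a checklist consistently across demonstrations is to separate essential items from desired ones, drop any vendor missing an essential item, and rank the rest by how many desired items they offer. The sketch below is only an illustration of that idea: the feature labels and vendor names are hypothetical, and it does not reproduce the spreadsheet used in this case study.

```python
# Hypothetical checklist: labels and vendors are illustrative only.
ESSENTIAL = {"time tracking", "FERPA compliance", "screen-reader support"}
DESIRED = {"background check tracking", "LMS integration", "bulk emailing"}


def review_vendor(features: set[str]) -> tuple[bool, int]:
    """Return (meets every essential item, number of desired items offered)."""
    meets_essentials = ESSENTIAL <= features  # subset test
    desired_count = len(DESIRED & features)
    return meets_essentials, desired_count


vendors = {
    "Vendor A": {"time tracking", "FERPA compliance", "screen-reader support",
                 "LMS integration"},
    "Vendor B": {"time tracking", "FERPA compliance", "background check tracking",
                 "bulk emailing", "LMS integration"},
    "Vendor C": {"time tracking", "FERPA compliance", "screen-reader support",
                 "LMS integration", "bulk emailing"},
}

# Keep only vendors that satisfy every essential item, then rank by desired items.
shortlist = sorted(
    (name for name, feats in vendors.items() if review_vendor(feats)[0]),
    key=lambda name: review_vendor(vendors[name])[1],
    reverse=True,
)
print(shortlist)
```

In this sketch, Vendor B offers the most desired features but is excluded because it lacks one essential item, mirroring the point that attractive extras should not outweigh core requirements.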

Selecting a price point was a complex conversation. Most vendors presented a cost-sharing model in which students pay for their own licenses, because this choice does not depend on a new budget cycle and speeds the time to software adoption. Vendors suggested that the cost be charged to student accounts as an “internship fee,” or that access codes be purchased through the university bookstore, with an expense burden like that of a textbook. However, because field placement software serves an administrative function and the field experience is already costly (due to background clearances, liability insurance, transportation, possibly time away from work, extra child care costs, etc.), we decided to make the argument to leadership that this resource would reduce the administrative burden on the staff. This would ultimately improve operational efficiency and save money over time, and therefore the cost should be shouldered by the department.

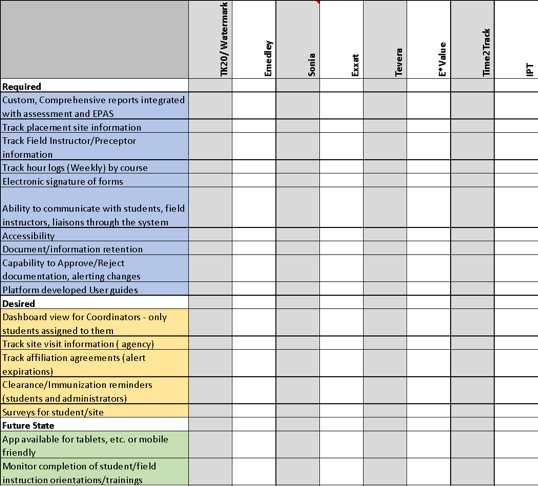

Ultimately, we chose the best software vendor for our context, but had to make some sacrifices. For example, one vendor offered a solution to streamline the student background check process, which allowed the university to cover the cost and maintain digital access to ensure compliance and track background check expirations. The final vendor did not offer this feature. Although this feature was listed as a “desired” item in our spreadsheet (Figure 1), it was not considered as an essential element of our platform. We did request that the final vendor consider adding this feature in their next round of product development.

The vendor we selected charges a one-time lifetime access fee of $195 per student. Although this cost seems high, we decided the benefits and assurances it provides our program is well worth the price. Included in this is the scalability we needed to expand enrollment across multiple states, and constant visibility over the regulatory issues across states. It also provides students with lifetime access to their hours and evaluations that are entered in the system, which not only reduces the administrative tasks of the field department, but also satisfies the many variations of state regulations of documentation retention.

Students also have an added benefit of being able to continue to track their hours in the system post-graduation if they are pursuing licensure. As a new program, it was critical to choose a platform that streamlined and simplified as many processes as possible, and this vendor was willing and able to make adjustments that we needed to immediately implement the platform.

Figure 1: Screenshot of Spreadsheet Used to Help Standardize Reviews

Lessons Learned and Recommendations

Based upon the first author’s leadership in choosing vendors and software to deploy in a field education program, and the lessons learned in that process, the following recommendations are offered to field education offices as they consider their own software needs. It may take a year or more to move from pre-selection to deployment activities, but this thoughtful planning may save future costs and regrets.

Pre-Selection

Because this is a high-stakes decision in terms of money, time, and other resources, field administrators may want to create a decision-making committee, talk with other social work program administrators who have already adopted specific brands of software, and invite company representatives to campus for a product demonstration. Consider appointing an ad-hoc software selection committee to manage the process. This committee can help perform tasks such as developing a checklist of required, preferred, and future software functionality, such as those functions named in Table 2 and Figure 1. Future functions help articulate your desires for future technology upgrades, growth, or even advanced features like a time-tracking app or mobile-friendly features for students’ easy access while in the field. Other future utilities might include monitoring completion of orientations/trainings, or other goals you set as a team. Prior planning helps focus on the elements needed or desired prior to reviewing any products. In this way, you can assess vendors based on essential requirements, and not flashy features that seem exciting during sales meetings but do not meet immediate needs of the field office.

Consider the impact of technology changes on stakeholders. This is a difficult area to quantify, but high-quality software and training are an investment in relationships with community partners. These relationships are important to keep in mind when selecting a field placement software program and are another significant area to capture. Oftentimes, field instructors are working with multiple institutions, and must master multiple software programs. Ease of use and on-demand troubleshooting will help reduce friction and keep all partners engaged. When you look at platforms, consider whether they offer training and update those trainings as they make software upgrades.

The committee can also help determine a realistic timeline for selecting and implementing a software platform, and whether a phased or rapid implementation process should be adopted. This team should include representation of various administrative departments with subject matter expertise in regulatory and policy issues that can help inform vendor selection. Additionally, this team can provide information about other relevant software being used within the university by other programs. If it is possible for multiple departments to use one system, it may substantiate investment proposals to leadership (if senior level approval is required in order to adopt a platform within your institution) by creating opportunities to streamline processes, combine budgets, and increase student user counts that may help negotiations with the vendor.

Ask your team to consider the cost savings of software as well. Leadership may be interested to learn how software can reduce storage and administrative burdens as students request their final evaluations and time logs for licensure, employment, admissions requirements for master’s programs, or other needs in years to come. Although the value of the time saved on these tasks can be difficult to quantify, it can still be presented as a potential cost saving in a final proposal.

Plan to request transparency about the complete cost of implementing and maintaining the new system so that you can compare accurate price points across vendors. Ancillary expenses beyond student accounts, such as training costs or customization fees, can add up. It is important to clarify how the vendor counts student accounts, or licenses. If the vendor uses a per-user model, the number of paid accounts could be 3-4 times student enrollment, because accounts are also needed for field instructors, task instructors, faculty, and field administrators. Another important consideration is whether the fees are annual or include lifetime access. If your program (or programs, if you host both BSW and MSW programs) has several years of internships, annual fees for each student will add up over time. If you host both BSW and MSW students, it may be beneficial to review your retention curves while choosing a vendor. Depending on your attrition rates, it may be particularly cost effective to choose a platform that provides lifetime access, where you purchase an account only once for a student who remains in your program for several years. The decision about how costs will be financed (i.e., in the department budget or as a fee to students) is best made prior to vendor conversations, as the vendors will make a strong pitch to sway your decision in their favor.
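
To make these comparisons concrete, the following Python sketch estimates the cost of one student cohort under different pricing models. It is a simplified illustration under stated assumptions, not a costing method used in the case study: the class `PricingModel`, the function `cohort_cost`, and every fee, multiplier, and retention figure are hypothetical placeholders. It assumes a constant year-to-year retention rate, a fixed ratio of accounts to students under a per-user model, and ancillary costs that are paid once.

```python
from dataclasses import dataclass


@dataclass
class PricingModel:
    """Hypothetical vendor quote; all numbers are placeholders."""
    name: str
    fee_per_account: float            # price of one account (license)
    annual: bool                      # True: fee recurs yearly; False: lifetime access
    accounts_per_student: float = 1.0  # roughly 3-4 under a per-user model
    one_time_costs: float = 0.0       # training, customization, and similar fees


def cohort_cost(model, students, program_years, retention=1.0):
    """Estimate the cost of one entering cohort over its years in field.

    retention is the assumed share of students who continue from one
    year to the next (1.0 means no attrition).
    """
    first_year_accounts = students * model.accounts_per_student
    if model.annual:
        # Pay every year for the accounts still needed as the cohort shrinks.
        license_cost = sum(
            first_year_accounts * retention ** year * model.fee_per_account
            for year in range(program_years)
        )
    else:
        # Lifetime access: each account is purchased once, at entry.
        license_cost = first_year_accounts * model.fee_per_account
    return license_cost + model.one_time_costs


if __name__ == "__main__":
    quotes = [
        PricingModel("Vendor A (annual, per student)", 50.0, annual=True),
        PricingModel("Vendor B (lifetime, per student)", 120.0, annual=False),
        PricingModel("Vendor C (annual, per user)", 20.0, annual=True,
                     accounts_per_student=3.5, one_time_costs=2000.0),
    ]
    for quote in quotes:
        cost = cohort_cost(quote, students=100, program_years=2, retention=0.9)
        print(f"{quote.name}: {cost:,.2f}")
```

Running the same function with your own enrollment, program length, and retention figures shows how quickly a low per-account fee can be offset by a per-user multiplier or by annual renewal, which is why the full fee structure is worth requesting from each vendor.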

It may be helpful to think of ways that the software might serve creative functions throughout the curriculum. For example, if the software hosts a database of all affiliated agency partnerships, could this be used within an assignment where students search for agencies in their area that serve a particular population? Can students identify unmet needs in their community based on this resource? Can they identify an agency that serves a population identified by the Grand Challenges for Social Work or a practice setting identified by CSWE (Barth, Gilmore, Flynn, Fraser, & Brekke, 2014)? Exposing students to this platform early and often can reduce their anxiety in using it when they finally enter field placement.

Sharing details about the thorough screening process is likely to improve buy-in from faculty, administrators, and other stakeholders, and the team may choose some key points early on to report back to administrators or faculty. It may also be necessary to develop a final proposal or presentation to higher administrators if their approval is needed to proceed with procurement. This proposal would likely include any data that document the problems and concerns with the current processes, the needs assessment checklist, a deployment timeline, and the anticipated impact on students and field sites. It may also be wise to emphasize issues that are most important to this audience, including efficiency, compliance, accreditation risks, or impact on the students’ and field sites’ experiences. The costs and potential cost savings should also be shared, along with a description of the two or three finalist vendors and the reasons that justified your final selection.

During Selection

As the selection team begins talking to vendors, the authors recommend interviewing each one prior to setting up a demonstration to collect information about their ability to meet your basic requirements as listed in your checklist. If the vendor meets the required checklist items, then proceed with scheduling meetings and demonstrations, with recordings of the presentation saved for the team to document and revisit as needed.

Many vendors will request specific information about your program to inform their sales strategy. Before providing specific details (such as program size or the number of affiliation agreements you maintain), consult your legal team and request a Non-Disclosure Agreement (NDA) between parties. This helps protect your conversations so the vendor cannot share your proprietary information with competing programs. Following initial demonstrations, you may want to create a list of vendors based on their performance in the checklist, and after securing an NDA, share your list of needs with them and request that they prepare a “proof of concept” proposal for your program, describing how their software meets your needs and outlining costs.

Expect ongoing communication with university stakeholders to keep you up to date on changes to regulatory standards, legal or institutional regulations related to privacy and confidentiality standards, accessibility and accommodation policies, and other issues that influence software decisions. It is helpful to know early in the process whether a vendor meets the minimum standards. To satisfy these stakeholders, you may need to give them access to a demo account (or a similar alternative). For example, the accessibility team at our school needed to test the use of screen readers and other assistive technologies within the platform to confirm compliance. Vendors may be unwilling or unable to provide these options, and this may inform vendor selection.

If your program meets primarily face-to-face in a traditional classroom and is regional, your university may not set high standards for compliance with the Web Content Accessibility Guidelines (Web Accessibility Initiative, 2018), which are designed to remove barriers to learning in online spaces. If your university is online or hybrid, there is likely already a set of standards required from vendors. Knowledge about the Web Content Accessibility Guidelines, and your university’s level of accessibility requirements, can help you decide which vendors to explore. Adding “accessibility” to your checklist is important, but additional details are needed when communicating with vendors. Although many vendors promise that their platform is accessible, be sure to have your in-house accessibility services review each platform to confirm compliance. Our professional ethics urge us to make decisions in consideration of the most vulnerable, but platforms that meet the latest Web Content Accessibility Guidelines standards (Web Accessibility Initiative, 2018) can be expensive for some programs. For this reason, the authors recommend working with your disability services office to choose a platform that meets at least your minimum institutional needs.

Upon Selection

Once the ad-hoc committee selects its top platform choices, it may be useful to further vet them by contacting references or other universities that use the software. It may be possible to ask the vendor directly for contact information for schools of similar size. It is also common to discuss software experiences through field directors’ listservs, which may be a particularly helpful place to gather information about why an institution did or did not select a specific vendor.

It will be important to map out the institutional resources you will need for implementation support, such as IT or classroom operations, as you will need to train and support students, faculty, and field educators on this new system. A “user friendly” experience for all stakeholders, but particularly field and/or task instructors, will improve buy-in. A brief and easily accessible training program for field/task instructors will be needed. It is preferable for the vendor to create these user guides and materials for you to refer to, and the vendor should be responsible for continuously revising the content as the software changes over time.

After Selection

Following approval from leadership, a thorough change management strategy should be implemented to phase in the new platform. This strategy should identify all areas that will be updated to accommodate the new platform (curriculum, handbooks, orientations, etc.) and create a plan to address these within the timeline. A phased implementation approach may be adopted to pilot test the system and make adjustments to minimize the impact on students and community partners.

Customizing the software platform for your institution will be an iterative process and will require ongoing communication and testing. The vendor should have a list from you of all the documents and processes that need to be integrated into the system. The field office must then consider how to deploy training. The vendor may be able to provide some initial training, but the field office will likely be responsible for the bulk of it. The authors recommend working with instructional designers to create content and/or online modules and, if possible, conducting off-site training in agencies. Your program might consider highlighting the opportunity to develop digital literacy and ethical practice with technology, as outlined in the NASW (2017b) Standards for Practice with Technology and the updated NASW (2017a) Code of Ethics, which adds value to the technical components of software training. The team may also want to develop a process for assessing and tracking technical support needs and vendor questions, as well as a continuous quality improvement process that covers software use and training.

Conclusion

As the signature pedagogy of social work education, field education may be the most important and complicated aspect of program development. Effectively managing complex administrative processes is a priority to ensure program and student success, and ideally creates space for field education faculty and staff to focus on the pedagogy and social work tasks associated with field education. As programs and tracking requirements grow in complexity, it is important to find a platform that is the ideal match for a program. It takes time, collaboration, and strategic planning to implement a new software platform. Ultimately, it can significantly improve the experiences for all stakeholders.

The development of a thorough, cross-departmental, and interdisciplinary needs assessment was critical to defining the problems to be solved by a software platform in the case study offered in this article. Articulating the needs, gaps, and risks associated with the current processes also helped garner support from leadership, both in terms of resources and funding. Without this thorough software review process, which included considerations of the monetary impact on the department, choosing a commercial software would not have been a worthwhile time investment. In the end, the first author’s school chose Tevera, which the authors share because it is a common question. However, this would not be the best fit for every school, and the main contribution of this article, the authors hope, is helping readers make an independent decision based on the needs of their own programs.

Access to technology, tools, and resources that manage the complexity of field placement can alleviate some administrative burden and ultimately result in cost savings through improved efficiency. By streamlining processes and centralizing resources, field educators can re-allocate their time to student-centered initiatives, as well as to networking and nurturing relationships with community partners.

Implications for Social Work Field Education

Social work field educators have an important role in the incorporation of technology into the administration of social work educational programs. By selecting and implementing field placement software, field educators can help social work programs collect vast amounts of data about both the process and outcomes of field education, from the characteristics of quality field placements to student learning outcomes. The ability to collect data provides an opportunity to answer important research questions about the social work educational process that can inform pedagogical and administrative strategies. Big data practices combined with data and/or predictive analytics have the potential to allow the field to incorporate evidence-informed practices in social work education (Coulton, Goerge, Putnam-Hornstein, & de Haan, 2015; Robbins, Regan, Williams, Smyth, & Bogo, 2016), and improve the knowledge base regarding what constitutes successful placement experience in areas where research is lacking (Dill, 2017).

Further, social work field educators are now in a unique position to influence the digital proficiency of the profession by working in the spaces between the university and community practice. By effectively introducing digital technology into the field placement process, field educators are essentially educating both future and current social work professionals about the knowledge, skills, and values needed to competently and ethically work in digital spaces. Part of this approach includes adopting the frameworks of digital literacy and ethical practice into the selection, design, and implementation of technology in field education programs (Hitchcock et al., 2019; NASW, 2017a, 2017b). Along with institutional and professional guidelines (NASW, CSWE, etc.), field educators may want to consider regulations from outside the United States, such as the European Union’s General Data Protection Regulation, when incorporating technology into field education (European Commission, n.d.), as well as any local regulations, such as the California Consumer Privacy Act, which took effect in 2020 (Metayer, 2019). Because field education is the signature pedagogy of social work education, field educators who use digital and social technologies within their programs are well-positioned to move the profession into the 21st century.

References

Barth, R. P., Gilmore, G. C., Flynn, M. S., Fraser, M. W., & Brekke, J. S. (2014). The American Academy of Social Work and Social Welfare: History and grand challenges. Research on Social Work Practice, 24(4), 495–500. doi:10.1177/1049731514527801

Buck, P. W., Bradley, J., Robb, L., & Kirzner, R. S. (2012). Complex and competing demands in field education: A qualitative study of field directors’ experiences. Field Educator, 2(2). Retrieved from http://fieldeducator.simmons.edu/article/complex-and-competing-demands-in-field-education/

Buck, P. W., Fletcher, P., & Bradley, J. (2016). Decision-making in social work field education: A “good enough” framework. Social Work Education: The International Journal, 35(4), 402–413. doi:10.1080/02615479.2015.1109073

Coulton, C. J., Goerge, R., Putnam-Hornstein, E., & de Haan, B. (2015). Harnessing big data for social good: A grand challenge for social work (White Paper No. 11). Baltimore, MD: American Academy of Social Work & Social Welfare. Retrieved from https://grandchallengesforsocialwork.org/wp-content/uploads/2015/12/WP11-with-cover.pdf

Council on Social Work Education. (2008). Educational policy and accreditation standards. Retrieved from https://www.cswe.org/getattachment/Accreditation/Standards-and-Policies/2008-EPAS/2008EDUCATIONALPOLICYANDACCREDITATIONSTANDARDS(EPAS)-08-24-2012.pdf.aspx

Council on Social Work Education. (2015). Educational policy and accreditation standards for baccalaureate and master’s social work programs. Retrieved from https://www.cswe.org/getattachment/Accreditation/Accreditation-Process/2015-EPAS/2015EPAS_Web_FINAL.pdf.aspx

Council on Social Work Education. (2018). 2017 statistics on social work education in the United States. Retrieved from https://www.cswe.org/Research-Statistics/Research-Briefs-and-Publications/CSWE_2017_annual_survey_report-FINAL.aspx

Dill, K. (2017). Field education literature review: Volume 1. Field Educator, 7(1). Retrieved from http://fieldeducator.simmons.edu/article/field-education-literature-review-volume-1/

European Commission. (n.d.). Data protection. Retrieved from https://ec.europa.eu/info/law/law-topic/data-protection_en

Hitchcock, L. I., Sage, M., & Smyth, N. J. (2019). Teaching social work with digital technology. Alexandria, VA: CSWE Press.

Hunter, C. A., Moen, J. K., & Raskin, M. S. (2016). Social work field directors: Foundations for excellence (revised ed.). New York, NY: Oxford University Press.

Metayer, J. (2019, March 26). What does the CCPA mean for colleges and universities? International Association of Privacy Professionals – The Privacy Advisor. Retrieved from https://iapp.org/news/a/what-does-the-ccpa-mean-for-colleges-and-universities/

National Association of Social Workers. (2017a). Code of ethics. Retrieved from https://www.socialworkers.org/About/Ethics/Code-of-Ethics/Code-of-Ethics-English

National Association of Social Workers. (2017b). NASW, ABSW, CSWE & CSWA standards for technology in social work practice. Retrieved from http://www.socialworkers.org/includes/newIncludes/homepage/PRA-BRO-33617.TechStandards_FINAL_POSTING.pdf

Rainsberger, R. (2018). The top 5 sections of the FERPA regulations: Consider how FERPA applies to contractors. The Successful Registrar, 18(3), 1–3. doi:10.1002/tsr.30464

Robbins, S. P., Regan, J. A. R. C., Williams, J. H., Smyth, N. J., & Bogo, M. (2016). From the editor—The future of social work education. Journal of Social Work Education, 52(4), 387–397. doi:10.1080/10437797.2016.1218222

Samuels, K. (2018, September 7). Student placement software for the social work field office: Goodbye post-it notes! [Blog post]. Retrieved from https://www.laureliversonhitchcock.org/2018/09/07/student-placement-software-for-the-social-work-field-office-goodbye-post-it-notes

Schramm, W. (1971). Notes on case studies of instructional media projects. Stanford, CA: Stanford University, Institute for Communication Research. Retrieved from https://files.eric.ed.gov/fulltext/ED092145.pdf

Web Accessibility Initiative. (2018). Web content accessibility guidelines (WCAG) overview. Retrieved from https://www.w3.org/WAI/standards-guidelines/wcag

Wertheimer, M. R., & Sodhi, M. (2014). Beyond field education: Leadership of field directors. Journal of Social Work Education, 50(1), 48–68. doi:10.1080/10437797.2014.856230

Yin, R. K. (2018). Case study research and applications: Design and methods (6th ed.). Los Angeles, CA: SAGE Publications, Inc.