Abstract

Field education is considered the signature pedagogy of social work education and the primary setting in which students put classroom learning into practice. Preparing students for the field is therefore critical to their success. This paper explores the use of field labs, combined with simulation conducted in a controlled environment outside the classroom, to prepare social work students for their first field placement. Students participating in the program (N = 22) completed pre- and post-assessments of their knowledge of safety and engagement, measured with an objective exam, and of their self-estimated counseling skills, measured with the Counseling Self-Estimate Inventory. Results of a series of paired-samples t-tests with a Bonferroni correction indicated statistically significant increases in both knowledge and self-estimated counseling skills (p < .007), supporting field labs in conjunction with simulation as valuable tools for preparing social work students to enter field education.

Keywords: field education; simulation; field seminar; social work education

Introduction

Field education is considered the signature pedagogy of social work education and the essential setting in which students integrate classroom knowledge with practice. Shulman (2005) defines signature pedagogy as “types of teaching that organize the fundamental ways in which future practitioners are educated for their new professions” (p. 52). How best to support this integration of theory and practice in field education is a longstanding debate in social work education. According to the Council on Social Work Education (CSWE, 2015), competency-based education is “a framework where the focus is on the assessment of student learning outcomes (assessing students’ ability to demonstrate the competencies identified in the educational policy) rather than on the assessment of inputs (such as coursework and resources available to students)” (p. 20). Students must demonstrate mastery of these competency-based performance expectations to complete graduate social work programs, which elevates the importance of field education in graduate social work education. These high-stakes expectations can create student anxiety that inhibits or impairs performance or causes other forms of distress (Bogo, 2015), and they place additional demands on field instructors (Kourgiantakis et al., 2018). In contrast, research suggests that supportive learning environments may better prepare students for the field while mitigating their competency-related anxiety and stress (Bogo, 2015).

Building on traditional in-class role-playing exercises, structured simulation uses trained actors who portray standardized clients in a controlled environment outside the classroom. This paper describes a preliminary exploratory study of field labs combined with simulation (a teaching tool that uses trained actors to replicate practice situations realistically) to enhance preparation, practice, and learning among foundation-year Master of Social Work (MSW) students (N = 22). The study tested two main hypotheses:

- that overall student knowledge of safety and engagement would increase from the start to the conclusion of the field labs; and

- that students’ belief in their abilities, as reported on the Counseling Self-Estimate Inventory, would increase from the start to the conclusion of the field labs.

Literature Review

In 2008 and 2015, the CSWE published the Educational Policy and Accreditation Standards (EPAS), which described the importance of field education to social work education curriculum and defined field education as the signature pedagogy of social work education. In the case of social work education, the term signature pedagogy denotes field education as the place where classroom learning intersects with social work practice. Field education introduces students to the realm of professional practice and offers them the opportunity to apply new skills and demonstrate competencies. Intentionally designed field education involves instruction both inside and outside of the school. Students are supervised by trained instructors, and their practice is subject to rigorous evaluation in terms of competency acquisition (CSWE, 2008; CSWE, 2015).

By declaring field education the signature pedagogy of social work education and reinforcing mandated competencies, the CSWE (2008, 2015) standards further defined both specific learning outcomes for social work students and standardized expectations for field partners concerning student performance. As a result, field education has shifted away from apprenticeship models, in which a student learned one social worker’s job, toward a more innovative, competency-based model (Wayne et al., 2010).

MSW students are expected to begin field education able to acquire and demonstrate essential knowledge, skills, and behaviors, and with the cognitive-affective processing capabilities that allow developmental progression toward mastery of specific competencies and practice behaviors. Competency-based field education requires that students demonstrate integration of classroom knowledge with application of skills and behaviors during the field education experience (CSWE, 2008, 2015; Shulman, 2005; Wayne et al., 2010).

High-stakes expectations associated with signature pedagogies and competency-based field education can result in anxiety among students (Shulman, 2005; Hemy et al., 2016), and unmanaged anxiety can result in student distress and may stifle cognitive processing or impair performance (Baird, 2016; Kanno & Koeske, 2010; Gelman, 2004; Gelman & Lloyd, 2008). Previous research has found that foundation-year MSW students describe lack of preparation, skills, and experience as their greatest concerns when entering practicum agencies (Asakura et al., 2018; Kanno & Koeske, 2010; Gelman, 2004; Gelman & Lloyd, 2008). Identifying, understanding, and managing students’ concerns and anxieties allows educators to structure learning environments in ways that better prepare, support, and educate students for field practice (Hay et al., 2016; Shulman, 2005). Field education also relies heavily on volunteer field instructors who are not necessarily employed by or embedded in schools of social work but instead work in the community as social workers. The shift to high-stakes, competency-based field education has put new pressure on field instructors to guide students toward the integration and application of classroom knowledge and to provide ongoing feedback that supports students’ acquisition and mastery of practice skills and competencies (Kourgiantakis et al., 2018).

Several methods to support student integration of theory with practice and demonstration of competency exist in the literature. These include field seminars (Birkenmaier et al., 2003; Fortune et al., 2018; Poe & Hunter, 2009; Spira & Teigiser, 2010), specialized courses such as capstones (Schneller & Brocato, 2011), assignments embedded within particular social work courses (Dettlaff & Wallace, 2002; Lay & McGuire, 2010; Lee & Fortune, 2013), and enhanced training of field instructors (Deal et al., 2011).

The most common strategy, however, has been to implement seminars in response to student anxieties and to better support classroom-to-practice integration (Birkenmaier et al., 2003; Brown et al., 2002; Clapton et al., 2006; Fortune et al., 2018; Poe & Hunter, 2009; Spira & Teigiser, 2010). Field seminars, defined as class time or meetings with other students and a field faculty member, typically occur during the same semester(s) that students are placed in practicum. Although field seminars vary in structure, design, and goals, common themes include identifying practice tasks that demonstrate mastery of program competencies (CSWE, 2015); using reflective assignments and discussion to inform professional identities; providing opportunities to apply the social work professional code of ethics (National Association of Social Workers, 2018); and promoting professional behaviors (Dalton, 2012; Fortune et al., 2018; Poe & Hunter, 2009). The experience and value of being supported by and learning from peers has also been identified as a benefit of field seminars (Ben-Porat et al., 2019; Teigiser, 2009). A recent study comparing students placed in a field seminar with those who were not concluded that participation in a field seminar was associated with higher levels of satisfaction with the field education experience, identification with the social work profession, and critical thinking skills (Fortune et al., 2018).

At the time of writing, a review of the literature revealed scarce research on the use of simulation in field seminars or to prepare students for field education (Asakura et al., 2018; Kourgiantakis et al., 2018). In a 2018 study, 46–68% of first-time practicum students who reported anxiety over a perceived lack of preparedness benefited from practice courses and simulation prior to placement (Asakura et al., 2018; Wilson et al., 2013). Additional studies support simulation as a reliable evaluative tool for gauging students’ readiness for placement (Badger & MacNeil, 2002; Bogo et al., 2011). Most recently, research has begun to demonstrate the potential benefits of simulation in the clinical preparation of social workers (Kourgiantakis et al., 2019).

While simulation as a teaching tool has historically been concentrated in medical and allied health education, where it involves high-technology or high-fidelity mannequins and task trainers as well as interpersonal skills, social work education has increasingly embraced simulation as a form of education and training (Bogo et al., 2011; Bogo, Rawlings et al., 2014; Bogo, Shlonsky et al., 2014; Duckham et al., 2013; Logie et al., 2013; Lu et al., 2011; Miller-Cribbs et al., 2017; Sacco et al., 2017; Wilcox et al., 2017). The pioneering work of Bogo and colleagues (2014) provides detailed insight into the design, implementation, and use of simulation as a reliable method for assessing social work practice competencies. Simulation, they argue, has the potential to extend assessment of student learning beyond the course and into the field. Drawing on the medical model of the objective structured clinical examination (OSCE), they provide evidence of how simulation can be used to test competencies in social work education. The study also highlights the use of simulation for both formative and summative assessment, which is particularly useful given that a simulation can be adapted to learners’ developmental levels. Simulation may also simply provide opportunities for experiential learning, the development of social empathy, or feedback and practice (Bogo et al., 2014).

In this context, simulation refers to an educational tool in which trained actors play clients, patients, community members, providers, or other roles so that learners can practice interactional, therapeutic, or interpersonal skills. Depending on the scenario, simulations can involve individual, family, team, or group experiences across a variety of contexts and disciplines (Miller-Cribbs et al., 2017). Simulation allows for the creative use of facilities to best fit the contexts in which social workers and other trainees practice. This can include a home or apartment environment for home visits, typical therapy rooms, or outpatient and inpatient clinic rooms. It can also involve sophisticated technology that allows faculty and peers to observe learners, to annotate live or recorded video, and to record performances and other data, including observers’ entries into predetermined rubrics.

The most impactful simulation experiences frequently involve debriefing and feedback, often with video review (Bogo, Rawlings et al., 2014). Simulation experiences are designed to promote, develop, reinforce, and/or test key skills of a profession. Previous research has linked simulation as a learning tool to outcomes such as increased student self-confidence and capacity to link knowledge with skills (Bragg, Nay, Miller-Cribbs, & Munoz, 2020; Bragg, Kratz, Nay, Miller-Cribbs, Munoz, & Howell, 2020; Clapper, 2010; Costello et al., 2017; DeBenedectis et al., 2017; Issenberg et al., 2005; Jelley et al., 2016; Leake et al., 2010; Manning et al., 2016; Nimmagadda & Murphy, 2014; Wen et al., 2017; Yule et al., 2006).

The strengths of using simulation in social work education include the following: (a) it is compatible with principles of adult learning and professional, competency-based education; (b) it provides opportunities to practice skills in a realistic yet controlled environment; (c) simulation activities can be adjusted to the developmental levels of learners and can be used longitudinally to assess student progress; (d) while simulation has predominantly been used to focus on clinical skills, it can also be used to practice macro social work skills such as advocacy, administration, or research-based skills; (e) it can be used for both formative (low-stakes) and summative (high-stakes) assessments of learners; (f) it can provide opportunities for multi-source feedback and self-reflection; (g) issues related to social justice can be integrated into simulation scenarios; and (h) it can be used in interprofessional education (Bernstein et al., 2002; Clapper, 2010; Costello et al., 2017; Doel & Shardlow, 1996; Jelley et al., 2016; Logie et al., 2013; Manning et al., 2016; Nimmagadda & Murphy, 2014; Rudolph et al., 2008; Wilcox et al., 2017).

Methods

After IRB approval was obtained, students enrolled in the foundation-year field units were asked to participate in an ongoing educational assessment of the field labs and simulations in social work education. Participating students were evaluated using a single-group pre-post design. The outcome measures were a knowledge evaluation and the Counseling Self-Estimate Inventory (CSEI; Larson et al., 1992). Students were assessed once before the start of the field lab (see the following subsection for an outline of its specific activities) and again upon completion of the simulation debriefing at the end of the field lab.

Field Labs Overview and Design

The foundation-year field labs (seminars) were first implemented in response to feedback from graduating MSW students. They were reinforced by recommendations from focus groups with field instructors, who identified a need for more preparation and advanced practice skills among foundation-year students. The initial seminars were later reevaluated and developed into the field lab and activities described and assessed in this paper.

Whereas the social work field education literature uses the term “seminar” to describe a variety of classroom meeting formats that coincide with or are embedded within field education courses, this university uses the term “field labs” in the same sense. The structure of the field labs was built around course times, allowing students to have a midweek schedule. Foundation-year field education placements were confirmed during the summer term preceding the fall/spring field education period. Students spent the first two weeks of the fall term in field labs, delaying their start date at their designated practicum agency until the third week of the fall semester. Full-time students and part-time students who resided within 30 miles of campus were required to attend field labs and complete online modules.

The field labs were designed to prepare MSW students for field education experiences and supervised social work practice. The curriculum provided rich discussion on a variety of topics (e.g., practicum expectations, the role of empathy, ethical decision making, self-care, and safety) and opportunities to integrate knowledge with practice through decision case–based learning, peer learning and feedback, process discussions, and simulations. The field labs aimed to provide students with the wisdom and awareness to aid their continued development and competency growth, to cultivate their self-awareness, and to increase their confidence as students beginning social work practice.

The university’s social work field education faculty administered the field lab curriculum through lectures, in-person activities and discussions, and online modules. After completing these didactics, students participated in two simulations. The overall course content and schedule are described in Table 1.

Table 1: Course Content by Day

Course Assignments

In-Person Didactics and Activities

Students met in a classroom on a total of four different days throughout field labs, covering several topics (see Table 1). Activities included completing worksheets, small- and large-group discussions, reading and discussing decision cases, watching short videos, reading chapters from the required documentation textbook (Sidell, 2015), and participating in small group activities.

Online Modules and Quizzes

Students completed online modules and quizzes through the school’s online learning management system. In total, the modules took approximately eight hours to complete and introduced the following topics: engagement, active listening, nonverbal communication and observation, the art of questioning, and Motivational Interviewing. Content was introduced via PowerPoint lectures, videos, and informational handouts. Students completed a quiz following each module before moving forward.

Simulations

Following the didactic portion of the field labs, students participated in two simulations: (a) a home assessment and (b) a biopsychosocial assessment interview. Both simulation scenarios addressed competencies set out by CSWE (2015), including ethical and professional behavior; engaging diversity and difference in practice; and engaging, assessing, intervening, and evaluating with individuals. Students actively participated in their own simulation scenarios and observed their peers’ simulations in real time. The schedule was designed so that each student conducted a simulation with one scenario and then observed a peer with a similar client. After participating in and observing the simulations, students debriefed as a group. Each student received feedback from the instructors, fellow students, and the standardized client. Students also engaged in their own self-evaluation process after the simulation.

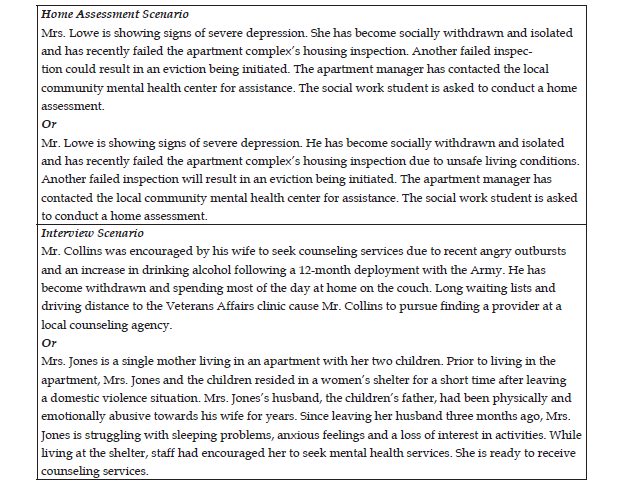

The home-assessment simulation scenario was developed to prepare students for the field. Many students provide home-based services during their field education, necessitating that students be equipped with the proper skills to ensure safety and efficiency in a client’s home. This simulation was designed to enhance students’ knowledge and abilities in assessing clients in the home environment. Students gained entry into the apartment by identifying as a student intern with the local community mental health center. Once inside the apartment, students conducted a safety assessment and formulated a mutually agreed-upon plan with the client.

The biopsychosocial-assessment simulation scenario aimed to give students an opportunity to practice and enhance basic social work interviewing skills. Most students will interview clients during their field education, so it is important to teach best practices and to equip students with the engagement skills needed to interact effectively with clients and gather appropriate information. This simulation was designed to enhance students’ knowledge and abilities in interviewing and assessing client needs through a brief 15-minute assessment interview. Students were to identify the biological, psychological, and social factors that may have been contributing to the client’s problems. Brief descriptions of the scenarios are provided in Figure 1.

Figure 1: Simulation Scenario Descriptions

Participants

Participants consisted of foundation-year MSW students (N = 22) preparing to enter field education in the fall semester. All students enrolled in the field lab agreed to participate in the overall evaluation and completed both the pre- and post-assessments. Participants ranged in age from 21 to 54 years, with a mean age of 30.23 years (SD = 9.34), and were predominantly female (86.4%). To prevent identification of individual participants through small cells, race has been collapsed into white and non-white for analysis. A breakdown of participant demographics is shown in Table 2.

Table 2: Demographics of Students Participating in Educational Assessment

Measures

Knowledge

To assess gains in student knowledge of safety awareness and engagement, an objective test with correct and incorrect answers was required, as opposed to a subjective measure. In this study, safety was defined as students’ level of awareness, assessment, anticipation, and action in response to a given situation. Engagement was defined as students’ ability not only to anticipate escalation in a crisis but also to work actively toward de-escalation. Accordingly, a 10-item test with multiple-choice and true/false questions was designed by professionals with extensive experience in both social work practice and field education. To reduce the chance that students could answer correctly from commonly held knowledge alone, the items were written to pertain closely to the didactic content of the field labs. Possible scores ranged from 0 to 10, with one point for each correct answer and higher scores indicating greater knowledge of the subject matter. Content expert reviews and piloting among MSW graduates and advanced MSW students helped strengthen the content validity of the knowledge measure.
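The scoring rule described above (one point per correct item, totals from 0 to 10) can be sketched as follows. This is a minimal illustration only. The answer key and the function name are hypothetical placeholders, because the study's actual items are not reproduced here.

```python
# Hypothetical answer key for a 10-item multiple-choice / true-false test.
# The study's actual items and key are NOT reproduced here.
ANSWER_KEY = ["b", "a", "true", "c", "false", "d", "a", "true", "b", "c"]


def score_knowledge_test(responses, key=ANSWER_KEY):
    """Return the number of correct answers (0 to len(key)).

    Each item is worth one point; a wrong or blank response scores zero,
    so higher totals indicate greater knowledge of the material.
    """
    if len(responses) != len(key):
        raise ValueError(f"expected {len(key)} responses, got {len(responses)}")
    return sum(1 for given, correct in zip(responses, key) if given == correct)


# Example: a respondent who misses items 3 and 6 scores 8 out of 10.
example = ["b", "a", "false", "c", "false", "a", "a", "true", "b", "c"]
print(score_knowledge_test(example))  # 8
```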

Counseling Self-Estimate Inventory (CSEI)

Extensive research has examined self-efficacy and its relationship to counseling. This research has positively associated counseling self-efficacy with counseling competency, self-esteem, confidence in the ability to solve problems, remaining present in the moment, and empathetic feelings toward others, as well as with decreases in state and trait anxiety (Lannin et al., 2019). Designed as a self-assessment of counseling skills, the Counseling Self-Estimate Inventory (CSEI) consists of 37 items, each rated on a six-point Likert scale. Counseling self-efficacy is defined as “one’s beliefs or judgments about her or his capabilities to effectively counsel a client in the near future” (Larson & Daniels, 1998, p. 180). The CSEI includes subscales measuring micro-skills, or direct practice interviewing skills (MS); process skills, or one’s ability to process information and respond accordingly (PS); difficult client behaviors (DCB); cultural competency (CC); and awareness of values (AV). Possible scores on the CSEI range from 37 to 222, with higher scores indicating greater confidence in one’s counseling abilities. Sample items include “I am confident that I can assess my client’s readiness and commitment to change” and “When using responses like reflection of feeling, active listening, clarification, probing, I am confident I will be concise and to the point.” Previous research has demonstrated that the scale has acceptable reliability (α = .93; Kozina et al., 2010), and the reliability of the CSEI in the current study was also acceptable at both pre-assessment (α = .950) and post-assessment (α = .961).
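The arithmetic behind the CSEI score range and the reported reliability coefficients can be illustrated with a short sketch. It assumes that each item score has already been keyed so that higher values mean greater confidence, with any reverse-keyed items recoded beforehand. The function names are hypothetical. The alpha computation is the standard Cronbach's alpha formula, k/(k - 1) x (1 - sum of item variances / variance of total scores). It is not necessarily the exact SPSS procedure used in the study.

```python
from statistics import variance

N_ITEMS = 37                    # CSEI length reported in the text
LIKERT_MIN, LIKERT_MAX = 1, 6   # six-point Likert scale


def csei_total(item_scores):
    """Sum 37 keyed item scores; the result lies between 37 and 222."""
    if len(item_scores) != N_ITEMS:
        raise ValueError(f"expected {N_ITEMS} items, got {len(item_scores)}")
    if any(not LIKERT_MIN <= s <= LIKERT_MAX for s in item_scores):
        raise ValueError("item scores must be between 1 and 6")
    return sum(item_scores)


def cronbach_alpha(data):
    """Cronbach's alpha for a respondents-by-items matrix (list of rows).

    Sample variances are used throughout; because the same n - 1
    denominator appears in both variance terms, population variances
    would give the same alpha.
    """
    k = len(data[0])
    item_vars = [variance([row[j] for row in data]) for j in range(k)]
    total_var = variance([sum(row) for row in data])
    return (k / (k - 1)) * (1 - sum(item_vars) / total_var)


# The score range follows from 37 items x (1 to 6 points each).
print(csei_total([LIKERT_MIN] * N_ITEMS), csei_total([LIKERT_MAX] * N_ITEMS))  # 37 222
```

Alpha approaches 1 as items covary strongly relative to their individual variances. This is why values such as .950 and .961 indicate high internal consistency.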

Data Analysis

All surveys were matched and entered into IBM SPSS (version 25) for data analysis. The data had only two time points, and learning theory suggests that knowledge and skill self-estimates would increase (rather than decrease) after participation in the field labs. A series of one-tailed dependent (paired-samples) t-tests was therefore conducted.
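The study ran its tests in SPSS. The sketch below illustrates the same one-tailed paired-samples logic using only the Python standard library: compute each student's post-minus-pre difference, test whether the mean difference exceeds zero, and report an effect size. The tail probability is obtained by numerically integrating the t density (Simpson's rule). That is an implementation choice made for self-containment, not how SPSS computes it. The effect size shown, d_z (mean difference divided by the SD of differences), is an assumption, because the text does not state which variant of Cohen's d was reported. The function names and scores are hypothetical.

```python
import math
from statistics import mean, stdev


def t_pdf(x, df):
    """Density of Student's t distribution with df degrees of freedom."""
    log_norm = (math.lgamma((df + 1) / 2) - math.lgamma(df / 2)
                - 0.5 * math.log(df * math.pi))
    return math.exp(log_norm - (df + 1) / 2 * math.log1p(x * x / df))


def t_upper_tail(t, df, steps=10_000):
    """P(T > t), integrating the density from 0 to |t| with Simpson's rule."""
    a = abs(t)
    h = a / steps  # steps is even, as Simpson's rule requires
    total = t_pdf(0.0, df) + t_pdf(a, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    area = total * h / 3  # P(0 < T < |t|); the distribution is symmetric
    return 0.5 - area if t >= 0 else 0.5 + area


def paired_t_one_tailed(pre, post):
    """One-tailed paired-samples t-test of H1: mean(post - pre) > 0.

    Returns (t, df, p, d_z). Requires matched lists and a nonzero SD of
    the differences.
    """
    if len(pre) != len(post):
        raise ValueError("pre and post must be matched (same length)")
    diffs = [b - a for a, b in zip(pre, post)]
    n = len(diffs)
    d_bar, s_d = mean(diffs), stdev(diffs)
    t = d_bar / (s_d / math.sqrt(n))
    df = n - 1
    return t, df, t_upper_tail(t, df), d_bar / s_d


# Hypothetical scores for five students (not study data).
t, df, p, d_z = paired_t_one_tailed([1, 2, 3, 4, 5], [2, 4, 4, 6, 7])
print(f"t({df}) = {t:.2f}, one-tailed p = {p:.4f}, d_z = {d_z:.2f}")
```

Where SciPy 1.6 or later is available, `scipy.stats.ttest_rel(post, pre, alternative="greater")` performs the same one-tailed paired test.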

Bonferroni Corrections

Whenever multiple statistical comparisons are made using data from the same set, the probability of a Type I error increases (Bonferroni, 1936; Haynes, 2013). A Type I error, colloquially known as a false positive, occurs when a test indicates a statistically significant relationship that is not present in the population. It is a statistical artifact rather than a real effect, and its likelihood grows with each additional test performed on the same data set (Bonferroni, 1936; Haynes, 2013).

The Bonferroni correction (Bonferroni, 1936; Haynes, 2013) adjusts for the increased likelihood of false positives that stems from running multiple statistical tests on the same data set. It lowers the probability threshold below which relationships are judged statistically significant. Making the criterion more stringent than the standard p < .05 reduces the likelihood of false positives (Bonferroni, 1936; Haynes, 2013). The adjusted threshold is obtained by dividing the conventional significance level (.05) by the number of tests conducted on the same data set. In the current study, seven tests were conducted (knowledge, the aggregate CSEI score, and the five CSEI subscales), giving .05/7 ≈ .007. This adjusted threshold is considerably more conservative than the typical .05 threshold. In other words, relationships were judged significant only when p < .007. For completeness, p values are reported against both the conventional standard (p < .05) and the more stringent adjusted standard (p < .007).
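The correction described above amounts to one division and a pair of comparisons per test. A minimal sketch, with a hypothetical function name and placeholder p values that are not the study's results, is shown below. Strictly, .05/7 is about .0071. Rounding the threshold down to .007, as in the text, makes it very slightly stricter.

```python
ALPHA = 0.05


def bonferroni_flags(p_values, alpha=ALPHA):
    """Compare each p value with the uncorrected and Bonferroni thresholds.

    The corrected threshold is alpha / m, where m is the number of tests
    run on the same data set.
    """
    m = len(p_values)
    corrected = alpha / m
    flags = {
        name: {"uncorrected": p < alpha, "bonferroni": p < corrected}
        for name, p in p_values.items()
    }
    return corrected, flags


# Seven tests, as in the study; these p values are hypothetical
# placeholders, NOT the study's results.
hypothetical = {f"test_{i}": p for i, p in enumerate(
    [0.0001, 0.002, 0.004, 0.006, 0.010, 0.030, 0.400], start=1)}
threshold, flags = bonferroni_flags(hypothetical)
print(round(threshold, 4))  # 0.0071
for name, flag in flags.items():
    print(name, flag)
```

A test such as `test_5` (p = .010) would count as significant at .05 but not after correction. This pattern is the reason the paper reports results under both standards.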

Results

Prior to analysis, screening of the data indicated no outliers and an approximately normal distribution (Shapiro-Wilk p > .05). With the normality assumption satisfied, we analyzed pre-post change associated with exposure to the intervention.

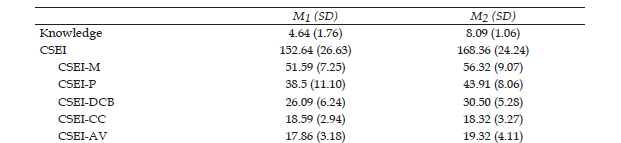

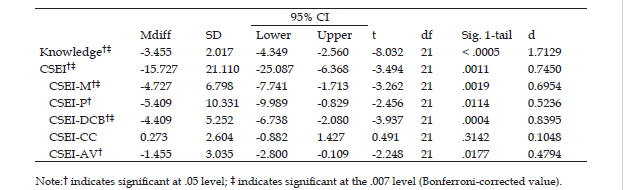

Because many educators are unfamiliar with the Bonferroni correction for Type I error, results are reported at both the .05 (uncorrected) and .007 (corrected) cutoffs. First, without the Bonferroni correction, the following measures showed statistically significant increases (p < .05): (a) knowledge of safety and engagement; (b) aggregate CSEI scores; and (c) each CSEI subscale except cultural competence (p = .314). The nonsignificant cultural competence result was expected because the simulations were not designed to address cultural competency (sometimes referred to as cultural humility). Using the more conservative Bonferroni-adjusted critical value (p < .007), results indicated statistically significant increases in knowledge of safety and engagement (M1 = 4.64 to M2 = 8.09), aggregate CSEI scores (M1 = 152.64 to M2 = 168.36), the DCB subscale (M1 = 26.09 to M2 = 30.50), and the MS subscale (M1 = 51.59 to M2 = 56.32). These significant changes had medium (d > .50) to large (d > .80) effect sizes.

These results suggest that participation in field labs, in combination with simulations, is associated with increases in student knowledge of safety and engagement (p < .007) and in confidence in overall counseling skills (p < .007), micro-skills (p < .007), and dealing with difficult client behaviors (p < .007). Without the Bonferroni correction, the results also indicate significant increases in process skills (p < .05) and awareness of values (p < .05), as measured by the CSEI, prior to beginning field education. Complete results, with both the uncorrected (.05) and corrected (.007) p values, are provided in Tables 3 and 4.

Table 3: Descriptive Statistics of Participants’ Pre-Post Scores

Table 4: Dependent Samples T-Test Results

Discussion

As indicated, students’ knowledge of safety and engagement and their self-estimated counseling skills showed statistically significant increases from pre- to post-administration. These results support the hypotheses that brief didactics and simulations have the potential to increase knowledge and skill self-estimates among MSW students entering field education. The findings have promising implications for preparing students for field education. The strongest results indicate that participation in the field labs and simulations was associated with increases in knowledge, overall self-rated counseling self-efficacy, and the difficult client behaviors and micro-skills subscales.

Previous research indicates that foundation-year students have concerns about lack of preparation, skills, and experience before beginning practicum, concerns that can result in unregulated anxiety and impaired performance (Gelman, 2004). The present findings suggest that field labs and seminars that include strong didactic training and simulation provide structured, protected learning environments in which students can increase their knowledge and embrace skill development. This in turn may improve counseling self-efficacy and confidence in their social work practice abilities. As indicated in previous research, the majority of social work students report “anxiety related to their lack of knowledge and skills to work with clients” (Asakura et al., 2018, p. 398). Students who feel prepared and confident may experience more manageable anxiety and be better positioned for practicum. Students with clinical confidence report being better prepared for practicum. They may be more likely not only to engage in practicum activities but also to seek out practice opportunities and experience greater autonomy in practicum, promoting mastery of the CSWE (2015) EPAS competencies. These outcomes suggest that field education programs can use simulations in a variety of ways to train and prepare students before and during field placement.

Limitations

One limitation is the small sample size and the demographic composition of this initial evaluation. As noted, every student in the field lab simulation participated in the evaluation, yielding 22 participants. This small sample included more females than males. In addition, the non-white category could not be broken down further, because small cell sizes could compromise anonymity. Generalizability is therefore limited. Further, student performance was not followed into field placements, so it is unknown whether these field labs enhanced student performance in the field.

Another limitation is the use of self-report measures. This study used the CSEI to measure self-efficacy, a construct grounded in Bandura’s (1997) work. An abundance of work highlights the relevance of self-efficacy to one’s ability to work in counseling (as noted earlier). However, researchers remain hesitant to rely on self-report measures because of concerns about distortion (Bogo, 2015).

Finally, the lack of a control group limits the ability to attribute the observed changes to the field labs. All students entering their first field placement enrolled in and completed the field lab, which included all components from didactics to simulation. Therefore, it cannot be determined whether these students performed better than counterparts at other institutions who did not participate in a field lab prior to placement.

Implications for Social Work Education and Practice

The preliminary results of this study have the potential to shape the development of field education in social work. Simulation allows students to hone important skills in a controlled environment before applying them in a professional setting. Social work has long embraced role play; however, role play is less standardized when fellow students act as clients. Simulation offers a standardized, safe environment in which to practice and, as this study suggests, helps students develop a clearer sense of their capabilities before beginning their first field placement.

To further understand the relationship between simulation and practice, research is underway to extend these preliminary findings. First, we will continue to collect pre- and post-assessments from field lab participants and to record simulations in accordance with the simulation centers’ policies. This will allow analysis of students’ qualitative comments about the field lab experience and will provide multiyear data. Second, we will link an observable-skills checklist, completed by faculty reviewing the simulation videos, to students’ pre- to post-assessment gains in knowledge and self-estimated skills.

A better understanding of how to support students and prepare them to succeed in field education will allow the profession to develop best practices, which can then be implemented across the curriculum in CSWE-accredited programs.

References

Asakura, K., Bogo, M., Good, B., & Power, R. (2018). Teaching Note—Social Work Serial: Using video-recorded simulated client sessions to teach social work practice. Journal of Social Work Education, 54(2), 397–404. https://doi.org/10.1080/10437797.2017.1404525

Badger, L. W., & MacNeil, G. (2002). Standardized clients in the classroom: A novel instructional technique for social work educators. Research on Social Work Practice, 12, 364–374.

Baird, S. L. (2016). Conceptualizing anxiety among social work students: Implications for social work education. Social Work Education, 35(6), 719–732. https://doi.org/10.1080/02615479.2016.1184639

Bandura, A. (1997). Self-efficacy: The exercise of control. W. H. Freeman and Company.

Ben-Porat, A., Gottlieb, S., Refaeli, T., Shemesh, S., & Zahav, R. R. E. (2019). Vicarious growth among social work students: What makes the difference? Health and Social Care in the Community, 28(2), 1–8. https://doi.org/10.1111/hsc.12900

Bernstein, J. L., Scheerhorn, S., & Ritter, S. (2002). Using simulations and collaborative teaching to enhance introductory courses. College Teaching, 50(1), 9–14. https://doi.org/10.1080/87567550209595864

Birkenmaier, J., Wilson, R. J., Berg-Weger, M., Banks, R., & Hartung, M. (2003). MSW integrative seminars: Toward integrating course and field work. Journal of Teaching in Social Work, 23(1–2), 167–182. https://doi.org/10.1300/J067v23n01_11

Bogo, M. (2015). Field education for clinical social work practice: Best practices and contemporary challenges. Clinical Social Work Journal, 43(3), 317–324. https://doi.org/10.1007/s10615-015-0526-5

Bogo, M., Rawlings, M., Katz, E., & Logie, C. (2014). Using simulation in assessment and teaching: OSCE adapted for social work. CSWE Press.

Bogo, M., Regehr, C., Logie, C., Katz, E., Mylopoulos, M., & Regehr, G. (2011). Adapting objective structured clinical examinations to assess social work students’ performance and reflections. Journal of Social Work Education, 47(1), 5–18. https://doi.org/10.5175/JSWE.2011.200900036

Bogo, M., Shlonsky, A., Lee, B., & Serbinski, S. (2014). Acting like it matters: A scoping review of simulation in child welfare training. Journal of Public Child Welfare, 8(1), 70–93. https://doi.org/10.1080/15548732.2013.818610

Bonferroni, C. E. (1936). Teoria statistica delle classi e calcolo delle probabilità. Pubblicazioni Del R Istituto Superiore Di Scienze Economiche e Commerciali Di Firenze, (8), 3–62.

Bragg, J. E., Kratz, J., Nay, E. D. E., Miller-Cribbs, J. E., Munoz, R. T., & Howell, D. (2020). Bridging the gap: Using simulation to build clinical skills among advanced standing social work students. Journal of Teaching in Social Work, 40(3), 242–255.

Bragg, J. E., Nay, E. D. E., Miller-Cribbs, J., & Munoz, R. T. (2020). Implementing a graduate social work course concerning practice with sexual and gender minority populations. Journal of Gay & Lesbian Social Services, 32(1), 115–131.

Brown, J. B., Karley, M. L., & Vitali, S. (2002). Building bridges: Perspectives from the field. Canadian Social Work, 4(1), 55–66.

Clapper, T. C. (2010). Beyond Knowles: What those conducting simulation need to know about adult learning theory. Clinical Simulation in Nursing, 6(1), e7–e14. https://doi.org/10.1016/j.ecns.2009.07.003

Clapton, G., Cree, V. E., Allan, M., Edwards, R., Forbes, R., Irwin, M., … Perry, R. (2006). Grasping the nettle: Integrating learning and practice revisited and re-imagined. Social Work Education, 25(6), 645–656.

Costello, M., Huddleston, J., Atinaja-Faller, J., Prelack, K., Wood, A., Barden, J., & Adly, S. (2017). Simulation as an effective strategy for interprofessional education. Clinical Simulation in Nursing, 13(12), 624–627. https://doi.org/10.1016/j.ecns.2017.07.008

Council on Social Work Education. (2008). Educational policy and accreditation standards. Author.

Council on Social Work Education. (2015). Educational policy and accreditation standards. Author.

Dalton, B. (2012). “You make them do what?” A national survey on field seminar assignments. Advances in Social Work, 13, 618–632.

Deal, K. H., Bennett, S., Mohr, J., & Hwang, J. (2011). Effects of field instructor training on student competencies and the supervisory alliance. Research on Social Work Practice, 21(6), 712–726. https://doi.org/10.1177/1049731511410577

DeBenedectis, C. M., Gauguet, J.-M., Makris, J., Brown, S. D., & Rosen, M. P. (2017). Coming out of the dark: A curriculum for teaching and evaluating radiology residents’ communication skills through simulation. Journal of the American College of Radiology, 14(1), 87–91. https://doi.org/10.1016/j.jacr.2016.09.036

Dettlaff, A., & Wallace, G. (2002). Promoting integration of theory and practice in field education: An instructional tool for field instructors and field educators. The Clinical Supervisor, 21(2), 145–160.

Doel, M., & Shardlow, S. (1996). Simulated and live practice teaching: The practice teacher’s craft. Social Work Education, 15(4), 16–33. https://doi.org/10.1080/02615479611220321

Duckham, B. C., Huang, H., & Tunney, K. J. (2013). Theoretical support and other considerations in using simulated clients to educate social workers. Smith College Studies in Social Work, 83(4), 481–496. https://doi.org/10.1080/00377317.2013.834756

Fortune, A. E., Rogers, C. A., & Williamson, E. (2018). Effects of an integrative field seminar for MSW students. Journal of Social Work Education, 54(1), 94–109. https://doi.org/10.1080/10437797.2017.1307149

Gelman, C. R. (2004). Anxiety experienced by foundation-year MSW students entering field placement: Implications for admissions, curriculum, and field education. Journal of Social Work Education, 40(1), 39–54.

Gelman, C. R., & Lloyd, C. M. (2008). Field notes: Pre-placement anxiety among foundation-year MSW students: A follow-up study. Journal of Social Work Education, 44, 173–183.

Hay, K., Dale, M., & Yeung, P. (2016). ‘Influencing the future generation of social workers’: Field educator perspectives on social work field education. Advances in Social Work and Welfare Education, 18(1), 39–54.

Haynes, W. (2013). Bonferroni correction. In W. Dubitzky, O. Wolkenhauer, K. H. Cho, & H. Yokota (Eds.), Encyclopedia of systems biology. Springer. https://doi.org/10.1007/978-1-4419-9863-7

Hemy, M., Boddy, J., Chee, P., & Sauvage, D. (2016). Social work students ‘juggling’ field placement. Social Work Education, 35(2), 215–228. https://doi.org/10.1080/02615479.2015.1125878

Issenberg, S. B., McGaghie, W. C., Petrusa, E. R., Gordon, D. L., & Scalese, R. J. (2005). Features and uses of high-fidelity medical simulations that lead to effective learning: A BEME systematic review. Medical Teacher, 27(1), 10–28. https://doi.org/10.1080/01421590500046924

Jelley, M., Miller-Cribbs, J., Oberst-Walsh, L., Rodriguez, K., Wen, F. K., & Coon, K. (2016). Addressing the chronically unexplained: Using standardized patients to teach medical residents pragmatic adverse childhood experience (ACE)-based interventions. Journal of General Internal Medicine, 32(Supplement 2), S802. https://doi.org/10.1007/s11606-016-3657-7

Kanno, H., & Koeske, G. F. (2010). MSW students’ satisfaction with their field placements: The role of preparedness and supervision quality. Journal of Social Work Education, 46(1), 23–38. https://doi.org/10.5175/JSWE.2010.200800066

Kourgiantakis, T., Sewell, K. M., & Bogo, M. (2018). The importance of feedback in preparing social work students for field education. Clinical Social Work Journal, 47, 124–133. https://doi.org/10.1007/s10615-018-0671-8

Kourgiantakis, T., Sewell, K. M., Hu, R., Logan, J., & Bogo, M. (2019). Simulation in social work education: A scoping review. Research on Social Work Practice, 30(4), 433–450. https://doi.org/10.1177/1049731519885015

Kozina, K., Grabovari, N., De Stefano, J., & Drapeau, M. (2010). Measuring changes in counselor self-efficacy: Further validation and implications for training and supervision. The Clinical Supervisor, 29(2), 117–127. https://doi.org/10.1080/07325223.2010.517483

Lannin, D. G., Guyll, M., Cornish, M. A., Vogel, D. L., & Madon, S. (2019). The importance of counseling self-efficacy: Physiologic stress in student helpers. Journal of College Student Psychotherapy, 33(1), 14–24. https://doi.org/10.1080/87568225.2018.1424598

Larson, L. M., & Daniels, J. A. (1998). Review of the counseling self-efficacy literature. The Counseling Psychologist, 26(2), 179–218. https://doi.org/10.1177/0011000098262001

Larson, L. M., Suzuki, L. A., Gillespie, K. N., Potenza, M. T., Bechtel, M. A., & Toulouse, A. L. (1992). Development and validation of the Counseling Self-Estimate Inventory. Journal of Counseling Psychology, 39(1), 105–120. https://doi.org/10.1037/0022-0167.39.1.105

Lay, K., & McGuire, L. (2010). Building a lens for critical reflection and reflexivity in social work education. Social Work Education, 29, 539–550. https://doi.org/10.1080/02615470903159125

Leake, R., Holt, K., Potter, C., & Ortega, D. M. (2010). Using simulation training to improve culturally responsive child welfare practice. Journal of Public Child Welfare, 4(3), 325–346. https://doi.org/10.1080/15548732.2010.496080

Lee, M., & Fortune, A. E. (2013). Do we need more “doing” activities or “thinking” activities in the field practicum? Journal of Social Work Education, 49, 446–660. https://doi.org/10.1080/10437797.2013.812851

Logie, C., Bogo, M., Regehr, C., & Regehr, G. (2013). A critical appraisal of the use of standardized client simulations in social work education. Journal of Social Work Education, 49(1), 66–80. https://doi.org/10.1080/10437797.2013.755377

Lu, Y. E., Ain, E., Chamorro, C., Chang, C.-Y., Feng, J. Y., Fong, R., Garcia, B., Hawkin, R. L., & Yu, M. (2011). A new methodology for assessing social work practice: The adaptation of the Objective Structured Clinical Evaluation (SW-OSCE). Social Work Education, 30(2), 170–185.

Manning, S. J., Skiff, D. M., Santiago, L. P., & Irish, A. (2016). Nursing and social work trauma simulation: Exploring an interprofessional approach. Clinical Simulation in Nursing, 12(12), 555–564. https://doi.org/10.1016/j.ecns.2016.07.004

Miller-Cribbs, J., Wells, S., Bragg, J. E., & Miller, G. (2017, October). Addressing grand challenges through innovative simulation. Workshop presented at the Council on Social Work Education 63rd Annual Program Meeting, Grand Challenges Teaching Institute, Dallas, TX.

National Association of Social Workers. (2017). Code of ethics of the National Association of Social Workers. Author.

Nimmagadda, J., & Murphy, J. I. (2014). Using simulations to enhance interprofessional competencies for social work and nursing students. Social Work Education, 33(4), 539–548. https://doi.org/10.1080/02615479.2013.877128

Poe, N. T., & Hunter, C. (2009). A curious curriculum component: The nonmandated “given” of field seminar. Journal of Baccalaureate Social Work, 14(2), 31–47.

Rudolph, J. W., Simon, R., Raemer, D. B., & Eppich, W. J. (2008). Debriefing as formative assessment: Closing performance gaps in medical education. Academic Emergency Medicine, 15(11), 1010–1016. https://doi.org/10.1111/j.1553-2712.2008.00248.x

Sacco, P., Ting, L., Crouch, T. B., Emery, L., Moreland, M., Bright, C., Frey, J., & DiClemente, C. (2017). SBIRT training in social work education: Evaluating change using standardized patient simulation. Journal of Social Work Practice in the Addictions, 17(1–2), 150–168. https://doi.org/10.1080/1533256X.2017.1302886

Schneller, D. P., & Brocato, J. (2011). Facilitating student learning, the assessment of learning, and curricular improvement through a graduate social work integrative seminar. Journal of Teaching in Social Work, 31(2), 178–194. https://doi.org/10.1080/08841233.2011.560532

Shulman, L. (2005). Signature pedagogies in the professions. Daedalus, 134(3), 52–59. http://www.jstor.org/stable/20027998

Sidell, N. (2015). Social work documentation: A guide to strengthening your case recording (2nd ed.). NASW Press.

Spira, M., & Teigiser, K. (2010). Integrative seminar in a geriatric consortium. British Journal of Social Work, 40(3), 895–910. https://doi.org/10.1093/bjsw/bcp005

Teigiser, K. S. (2009). Field note: New approaches to generalist field education. Journal of Social Work Education, 45(1), 139–146. https://doi.org/10.5175/JSWE.2009.200600056

Wayne, J., Bogo, M., & Raskin, M. (2010). Field education as the signature pedagogy of social work education. Journal of Social Work Education, 46(3), 327–339. https://doi.org/10.5175/JSWE.2010.200900043

Wen, F. K., Miller-Cribbs, J. E., Coon, K. A., Jelley, M. J., & Foulks-Rodriguez, K. A. (2017). A simulation and video-based training program to address adverse childhood experiences. The International Journal of Psychiatry in Medicine, 52(3), 255–264. https://doi.org/10.1177/0091217417730289

Wilcox, J., Miller-Cribbs, J., Kientz, E., Carlson, J., & DeShea, L. (2017). Impact of simulation on student attitudes about interprofessional collaboration. Clinical Simulation in Nursing, 13(8), 390–397. https://doi.org/10.1016/j.ecns.2017.04.004

Wilson, A. B., Brown, S., Wood, Z. B., & Farkas, K. J. (2013). Teaching direct practice skills using Web-based simulations: Home visiting in the virtual world. Journal of Teaching in Social Work, 33, 421–437. https://doi.org/10.1080/08841233.2013.833578

Yule, S., Flin, R., Paterson-Brown, S., Maran, N., & Rowley, D. (2006). Development of a rating system for surgeons’ non-technical skills. Medical Education, 40(11), 1098–1104. https://doi.org/10.1111/j.1365-2929.2006.02610.x