Introduction

A key challenge for schools of social work is developing an evaluation system that meaningfully assesses student professional competencies, is user friendly, and cohesively integrates the educational contract and evaluations. In this article, we describe the process undertaken within our school of social work to overhaul our Master of Social Work (MSW) program field evaluation system. The timing of this overhaul coincided with our school’s implementation of the new Council on Social Work Education’s (CSWE) Educational Policy and Accreditation Standards (EPAS), which were first introduced in 2015 (CSWE, 2015). Because aligning our field education materials and processes with the new EPAS standards would take considerable effort, we used this as an opportunity to improve the field evaluation system as a whole. This two-year, iterative process engaged a field advisory committee of field instructors, MSW students, field staff, and faculty members in conceptualizing the materials, refining the content, and guiding the functionality of the new online and integrated system that was built by the faculty members. This article describes the triumphs and travails of building the new system. This case study will be useful to other MSW programs looking for innovative ways to update their field protocols to comply with CSWE requirements and upgrade their evaluation systems in a resource-constrained environment.

Field education is a signature pedagogy of social work education (Wayne et al., 2010). Within the field component of their curricula, social work programs accredited through CSWE are required to assess nine competencies based on specific student behaviors. Students engage in field assignments and activities that are used to assess student performance on these behaviors. In the overall MSW curriculum, each competency must be assessed in two different ways, with one assessment based on “demonstration of the competency in real or simulated practice situations” (CSWE, 2015, p. 18). This requirement is often met by assessing the competencies in field education using end-of-semester field evaluations.

As Hitchcock and colleagues (2019) note, a vital responsibility of field education departments is to manage data effectively. These data include agency information, student placements, timesheets, performance evaluations, and other aspects of field education. Field education departments must decide on a practical and efficient way to manage these data. One option is to purchase a commercially available platform. There are many platforms to choose from that vary in functionality as well as cost (for a more detailed discussion, see Samuels et al., 2020). In this article, we focus specifically on student performance evaluation rather than other functions (e.g., student/agency placements).

The main advantages to using a commercial platform are usability and integration. These platforms offer a single hub to collect and manage information that is available to multiple stakeholders (e.g., students, faculty, field instructors), and offer options to customize features to the school’s data needs. The drawbacks of these systems are the amount of time and expertise needed to select a platform and the costs associated with acquiring and maintaining the system. Making a good software decision involves researching different products and considering IT capacity and legal issues with the school (Samuels et al., 2020). Software platforms, especially ones that have more desirable features and allow greater flexibility, can be prohibitively expensive. Many platforms charge fees per student or per user (e.g., student, field supervisor, advisor), and some companies also charge annual maintenance fees. These extra fees can be financially burdensome for students and for schools that have budget constraints.

A second option for schools of social work is to develop their own field education evaluation system in-house. To do so, schools typically work with their IT department to develop and maintain the system. Two major advantages of this innovative approach are customizability and cost. The evaluation system can be built from the ground up to fit the specific needs and requests of the field department. Even if the school has to cover the cost of IT staff’s time to develop and maintain the system, this is usually much cheaper than the per-user fees and annual fees that schools of social work are subject to when purchasing commercial platforms. There are some drawbacks that come with these advantages. For instance, schools need to have access to the technical expertise to develop the system, and capacity within the field education department to manage it effectively.

Background

To provide context for this article, we give some general information about our school and the evaluation system that was in place prior to the overhaul.

About the MSW Program and Field Education Department

Our school of social work is located in a northeastern state and offers bachelor’s, master’s, and doctoral degrees in social work. The school is medium in size, employing approximately 30 tenure-track faculty and enrolling about 400 MSW students each year. The majority of students complete the MSW program on a full-time basis over the course of two years, although about one-fifth of incoming students elect the three-year or four-year options. The program also offers a one-year advanced standing option for qualifying students who have already completed a BSW degree. MSW students matriculate in one of three concentrations: one focused on individuals, groups, and family practice (IGFP), which enrolls the majority of students; and two concentrations focusing on macro practice, one in community organizing (CO) and another in policy practice (PP).

The SSW’s Field Education Department is staffed by a director, four full-time professionals, and one or more work-study students. Advanced standing students complete one academic-year-long field placement, with all other MSW students completing two academic-year-long placements. The first year of field placement begins with generalist practice and incorporates some concentration activities in the second semester. The second-year field placement enables students to develop specialized skills in their chosen concentration.

All students begin their placements in September and follow one of two options for a weekly schedule. The majority of students are in their field placement for 20 hours per week from September through April, but about one-quarter of students are in field for 15 hours per week, staying in their placement through mid-June. Each student is assigned to an on-site field instructor who provides day-to-day oversight and weekly supervision. Students are assigned to a faculty advisor who is responsible for conducting field placement site visits with each of their student advisees and their field instructor, facilitating small-group seminars with their advisees, and providing academic- and field-related advisement.

Our Previous Field Evaluation Process

As late as the academic year 2017-2018, our existing field evaluation process was aligned with the 2008 CSWE EPAS competencies. The system consisted of an educational contract and two stand-alone online Qualtrics evaluations. A few weeks after a student began their field placement, a blank educational contract worksheet would be emailed to the field instructors and the students. It was expected that the student and field instructor would work collaboratively on completing the educational contract. The most important part of the contract involved identifying and listing planned activities for each of the 10 CSWE competencies. These carefully selected activities were intended to ensure that students would practice and execute behaviors to develop each competency on which they would later be assessed in their end-of-semester field evaluations. In principle, the planned activities would be a useful tool for the field instructors. When it was time to complete the field evaluation, they could retrieve a student’s educational contract and review the planned activities in which the student was observed in action, demonstrating their skills in each CSWE competency. If the planned activities in the educational contract changed during the course of the field placement (e.g., a planned activity was no longer relevant or feasible, or a new planned activity became available), then the existing educational contract form would need to be modified and resubmitted to the Field Education Department. In practice this step was rarely completed.

Educational contracts were paper documents whose entries were usually typed but sometimes completed by hand, and they had to be signed by the field instructor and faculty advisor. They were delivered, mailed, or scanned and emailed to the Field Education Department for storage. Students were responsible for providing a hard copy of the completed educational contract to their faculty advisor. Late in the fall semester, field instructors were emailed a link to an online survey evaluation for each student, and the field instructor rated the student on practice behaviors that pertained to each of the 10 CSWE competencies. Field instructors were encouraged to consult the student’s printed educational contract while completing the evaluation.

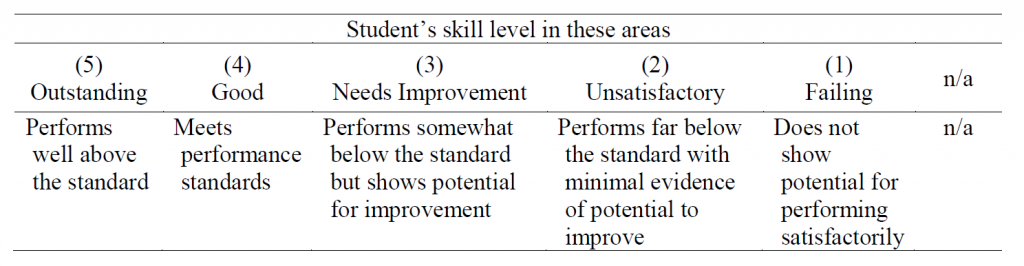

Each practice behavior was evaluated on a five-point Likert scale that ranged from 1 (Failing) to 5 (Outstanding) (see Table 1). There was also a sixth “n/a” option, but no instructions were provided about when and under what circumstances it should be selected. Once the evaluation was completed, the Field Education Department would individually email a copy of the evaluation results for each student to the field instructor, faculty advisor, and student. Finally, near the end of a student’s field placement, the evaluation process would be repeated: field instructors would complete the evaluation online, and results would be emailed individually to the relevant parties.

At the time the new system was developed, this evaluation process had been used for years. The Field Education Department occasionally received informal feedback about various aspects of the system, typically when stakeholders were frustrated. However, users’ perceptions of the system or the extent to which it fulfilled its intended objective of meaningfully assessing student field performance had not been formally evaluated. We decided to use our upcoming realignment with the 2015 EPAS standards as an opportunity to critically examine our field evaluation system and make needed changes.

Table 1

Previous Rating Scale

Methods

Beginning in fall 2017, the School’s Field Advisory Committee (FAC), which included the authors of this article, spearheaded a two-year initiative to examine and overhaul the field evaluation system. The FAC is a voluntary committee of School representatives: the Field Education Department staff and director, field instructors who represent the experience in the field, and faculty advisors and students from each concentration. It thus included the key stakeholder groups who use the evaluation system. While the field evaluation system is not a social service program, the FAC followed the best practices of program design: assessing the need and analyzing the problem from multiple stakeholder perspectives, and then designing, testing, implementing, and finally evaluating the program (Kettner et al., 2015).

First, the FAC gathered stakeholder input to identify significant, but changeable, issues with the existing system. Second, the FAC compiled recommendations for the new system. Third, in collaboration with the FAC, faculty members serving on the FAC built a fully online, Qualtrics-based system that incorporated the recommendations. Fourth, several internal rounds of pilot testing were conducted, followed by a year-long testing of the beta version. Fifth, at the end of the beta year, a brief online survey was administered to field instructors about the usability of and their satisfaction with the new system. This guided final adjustments to the system when it was fully implemented in academic year 2019-2020. The findings section summarizes the key results of each step in the process.

Findings

Step 1: Define and Analyze the Problem Through Stakeholder Input: Identify Significant but Changeable Issues

One of the chief complaints about the existing system was that it was cumbersome to use. The educational contract was a writable PDF that had to be completed; printed; hand-signed by the student, field instructor, and faculty advisor; rescanned; and then sent to the Field Education Department. The resolution of these documents was sometimes of poor quality, and it was not uncommon for one or more of the stakeholders to misplace the document. A minor adjustment in a student’s educational contract (e.g., change to a planned activity) required the laborious steps to be repeated, which created multiple versions of the student’s contract, and in practice was rarely done. Field Department staff had to manage this influx of 300+ educational contracts and check them for completion (e.g., ensuring a page was not missing). Because each contract was stored as a separate PDF document, there was no easy way to compile or analyze information across contracts, such as assessing the types of planned activities listed for Competency 9 for IGFP students. Managing the PDFs involved a lot of bookkeeping, particularly when different versions of the educational contract were created or when students changed placements.

Another major complaint was that the relationship between CSWE competencies, example practice activities, planned activities in the educational contract, and evaluated practice behaviors was often unclear. This was in part because the relationships were not clearly explained in the instructions. As a result, some students and field instructors reported that completing the educational contract felt like a rote activity that was disconnected from later evaluations of student proficiency.

The disconnection was also due to the way the system was structured. Ideally, a field instructor would have a copy of a student’s educational contract in hand as they completed the student’s evaluation. This would have allowed them to see the planned activities that were applicable to each CSWE competency. Evaluations were structured around the competencies rather than around a student’s performance on specific activities. This allowed the same evaluation to be used across placement sites, but made it difficult for field instructors to evaluate a student’s day-to-day actions in relation to the competencies. In practice, field instructors did not always have the educational contract with them to link activities to competencies; some field instructors forgot to retrieve the final educational contract from the student, did not have time to hunt their copy down, or misplaced the form. Consequently, the planned activities that aligned with each competency (from the educational contracts) were not readily identifiable when completing the evaluations.

Another point of disconnection was between the fall evaluation and the spring evaluation. When completing the end-of-year evaluations, not having a student’s midyear evaluation scores handy made it difficult to assess their growth over the second half of the field placement. This was important both for providing the most accurate rating and for writing the descriptive summary of the student’s progress.

A third set of concerns pertained to the five-point rating scale used in the assessments. The evaluation scoring was ineffective at identifying student strengths and areas of improvement. The “outstanding” score (which by definition should be interpreted as performing well above the standard) was selected at high rates. Additionally, when field instructors selected the “n/a” response, no information was obtained about the reason for this score. The five-point scale also did not match CSWE’s competency-focused assessment (i.e., distinguishing between students who were and were not proficient in a competency or a specific practice behavior).

Stakeholders raised several other concerns as well. Some students reported that they did not always see or receive a copy of their evaluations. Some faculty advisors received only paper copies of their students’ educational contracts. The example practice activities listed in the educational contract were generic rather than specific to each concentration, and were heavily focused on micro practice, making it more challenging for field instructors in the macro concentrations to develop the contract. Formatting in the writable PDF was sometimes difficult and caused frustration. The combination of these issues left many users with unsatisfying or even frustrating experiences.

Step 2: Designing the Program by Compiling Recommendations for the New System

In light of the significant limitations of the existing system, the members of the FAC were asked to obtain feedback from other constituents so that the perspectives of all the stakeholders could be included. The FAC met several times as a group to discuss and compile a list of recommendations. Recommendations focused on improving the connections between all of the pieces of a student’s field education experience and reducing frustration and increasing usability for field instructors. Student representatives of the FAC emphasized the importance of ensuring that they received copies of their educational contract and evaluations, and faculty advisor representatives requested that these forms be electronic rather than hardcopy. Field instructor and student representatives also asked that students consistently be given an opportunity to provide feedback on their fall and spring evaluations. To address these recommendations, the group identified possible solutions.

One approach that came out of these discussions was to develop a fully online system that discarded the unwieldy PDF version of the educational contract. In the new system, the embedded instructions would clearly explain how the different components (i.e., competencies, planned activities, behaviors) fit together. The new system would also integrate the educational contract and evaluations. Specifically, practice activities identified in the educational contract would automatically appear next to each competency in the midyear and end-of-year evaluations. This would give field instructors the specific activities for each student in which the student had an opportunity to demonstrate the competency. Students’ midyear evaluation scores would automatically populate next to each behavior in the end-of-year evaluation, which would enable field instructors to better assess growth in student proficiency.

Having everything online had several important benefits. It allowed the Field Department to more easily monitor and track the different documents throughout the year, facilitated the integration of the different documents to improve the user experience, and increased the amount and type of data that were available to analyze easily. Further, the fully online system allowed for flexibility in circumstances when in-person or paper copies were not feasible or practical (e.g., during the COVID-19 pandemic, when field staff, faculty advisors, and many students and field instructors were working remotely).

The FAC also recommended that an educational contract worksheet be created that was customized to each concentration. For example, instead of having generic sample planned activities, each worksheet would have sample planned activities that were tailored to the typical learning opportunities of each concentration. These would be helpful to students and field instructors when completing the educational contract, and would be particularly useful for first-time field instructors. A final recommendation was to develop a relevant, competency-based evaluation scale to evaluate the CSWE competencies and behaviors. These recommendations were also adopted and integrated into the system.

Step 3: Building a Fully Online System That Incorporated Stakeholder Recommendations

From spring to summer 2018, faculty members who were part of the FAC began constructing a fully online evaluation system in the survey platform Qualtrics. This was an iterative process, in which new features were built into the system, presented to the FAC for feedback, and revamped. Since Qualtrics is designed to administer surveys rather than to be used as an ongoing system of evaluation, the lead faculty member had to learn and employ certain technical features of Qualtrics in order for it to function as desired. The new system also incorporated the new 2015 EPAS behaviors associated with each competency for the generalist year developed by CSWE and the behaviors associated with each competency that the SSW faculty developed for each of the three specialized-year concentrations. The generalist year plus three specialized-year concentrations (IGFP, CO, PP), combined with the two field timelines (20 hours/week and 15 hours/week placements), meant that eight distinct evaluations were developed. The system was built to be user friendly for field instructors, faculty advisors, and students, as well as flexible for field education staff and useful for reporting to CSWE regarding the percentage of students who met the SSW-selected benchmarks for demonstrating competency on each of the nine competencies.
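The count of eight evaluations follows from crossing four program levels (the generalist year plus three specialized-year concentrations) with two field timelines. The short Python sketch below simply enumerates those combinations. The identifiers are hypothetical labels chosen for illustration, and the code is not how the Qualtrics surveys themselves were built.

```python
from itertools import product

# Illustrative labels only: the article names these levels and timelines,
# but the identifiers below are assumptions made for this sketch.
PROGRAM_LEVELS = ["Generalist", "IGFP", "CO", "PP"]
TIMELINES = ["20 hours/week", "15 hours/week"]


def evaluation_variants():
    """Return every (program level, timeline) pair that needs its own evaluation."""
    return list(product(PROGRAM_LEVELS, TIMELINES))


for level, timeline in evaluation_variants():
    print(f"{level} / {timeline}")
print(f"Total evaluations: {len(evaluation_variants())}")
```

Listing the combinations this way makes it easy to see how the number of forms to maintain would grow if a concentration or timeline were added.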

A Tour of the New Field Evaluation System

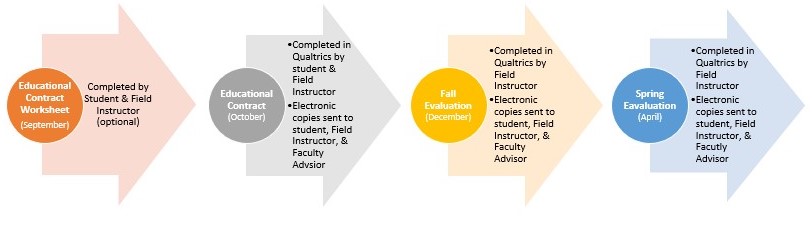

In this subsection, we walk readers through the experience of using the new system from a user’s perspective, drawing attention to certain features. A timeline of the main field planning and evaluation activities is displayed in Figure 1.

Figure 1

Timeline of Field Evaluation Activities

Completing the Educational Contract Worksheet. The preplanning phase for the work to be accomplished in the placement takes place in September, a few weeks after students begin their field placement. Students and field instructors are emailed an educational contract worksheet that is specific to their year in field and concentration (generalist year, and specialized-year IGFP, PP, or CO). The worksheet provides easy-to-follow instructions for its use, as well as an explanation of key terms (e.g., competencies, behaviors, planned activities) and how they are related to the assessment process. The purpose of the worksheet is to provide students with a tool to begin working collaboratively with their field instructor to identify planned activities that align with CSWE competencies. A separate document contains a table listing dozens of sample activities that are specific to the student’s year in field and concentration. The table displays which of the nine CSWE competencies each example activity aligns with. The example activities were developed by members of the FAC and can be referenced as students and their field instructors develop planned activities that are specific to their field placement. This worksheet is a writable PDF, which allows field instructors to easily copy and paste the planned activities later into the actual educational contract.

Completing the Educational Contract. In October, field instructors are emailed a unique survey link for each of their student interns. Qualtrics’ mailing system is programmed to automatically distribute the emails and to populate the student’s name in the body of the email. When the link is clicked, the educational contract opens in the field instructor’s web browser. Like the email, the educational contract is personalized with the field instructor’s and the student’s names in the body of the instructions. The instructions remind the field instructors to retrieve their educational contract worksheet and clearly describe how the planned activities will be used as reference in later evaluations to assess student competency. For each of the nine CSWE competencies, field instructors are directed to type (or copy and paste) two to four planned activities that align with the competency. Each competency is listed on a single page. The competency is first described, followed by sample planned activities (taken from the worksheet) and a text box into which field instructors type the planned activities. After field instructors complete the educational contract, Qualtrics is programmed so that a copy of the contract is automatically emailed to the field instructor, faculty advisor, and student. This ensures that all relevant parties receive a copy of the contract.
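The structure of the contract (nine competencies, each with two to four planned activities) can be expressed as a simple completeness check. The Python sketch below is an illustration under stated assumptions: the article says field instructors are directed to enter two to four activities but does not say whether the survey enforces that range, and the function name and data layout are hypothetical.

```python
NUM_COMPETENCIES = 9                    # 2015 EPAS competencies, per the article
MIN_ACTIVITIES, MAX_ACTIVITIES = 2, 4   # "two to four planned activities"


def contract_problems(contract):
    """Return a list of problems; an empty list means the contract looks complete.

    `contract` maps a competency number (1-9) to a list of planned-activity
    strings. This layout is an assumption made for the sketch.
    """
    problems = []
    for competency in range(1, NUM_COMPETENCIES + 1):
        # Ignore blank entries so an empty text box does not count as an activity.
        activities = [a.strip() for a in contract.get(competency, []) if a.strip()]
        if not MIN_ACTIVITIES <= len(activities) <= MAX_ACTIVITIES:
            problems.append(
                f"Competency {competency}: expected {MIN_ACTIVITIES}-{MAX_ACTIVITIES} "
                f"planned activities, found {len(activities)}"
            )
    return problems


# Hypothetical contract with one under-specified competency.
example = {c: ["Activity A", "Activity B"] for c in range(1, NUM_COMPETENCIES + 1)}
example[9] = ["Only one activity"]
for problem in contract_problems(example):
    print(problem)
```

A check of this kind is the sort of task that was impractical when each contract was stored as a separate PDF.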

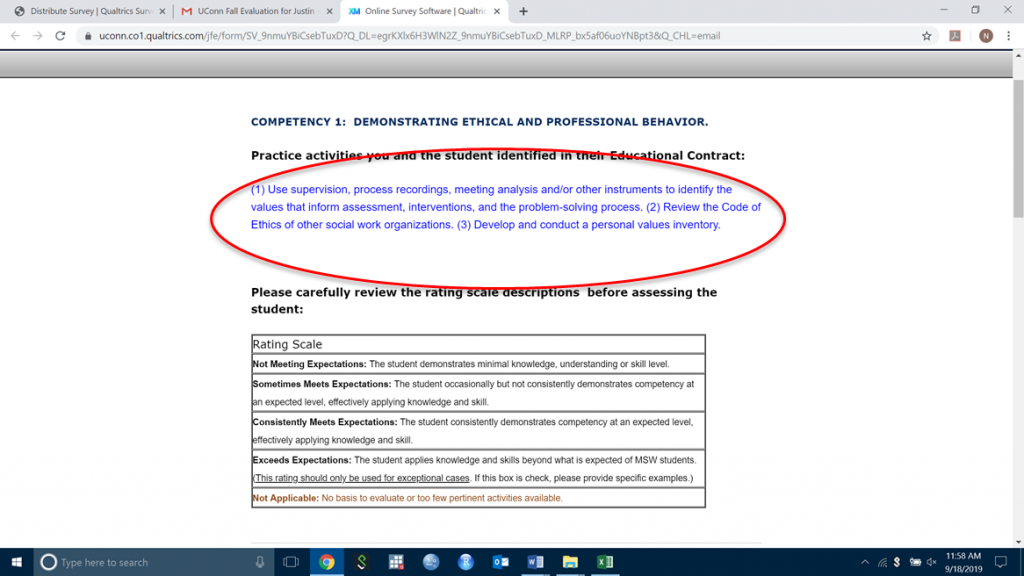

Completing the Fall Evaluation and Updating Practice Activities for the Spring. Later in the fall semester, an email is automatically sent from Qualtrics to the field instructor with a link to the student’s fall evaluation. The email and the evaluation are both customized, with the field instructor’s and the student’s names filled in. Following brief instructions for completing the evaluation, the field instructor advances to the first CSWE competency. On this page are the planned activities from the student’s educational contract, in bright blue ink; a table that defines the four-point evaluation scale; and the list of specific behaviors that the student is evaluated on for the competency. See Figure 2 for a screenshot example of the populated planned activities (circled in red) and the rating scale description.

Figure 2

Example of the Student’s Planned Activities Appearing in the Fall Evaluation.

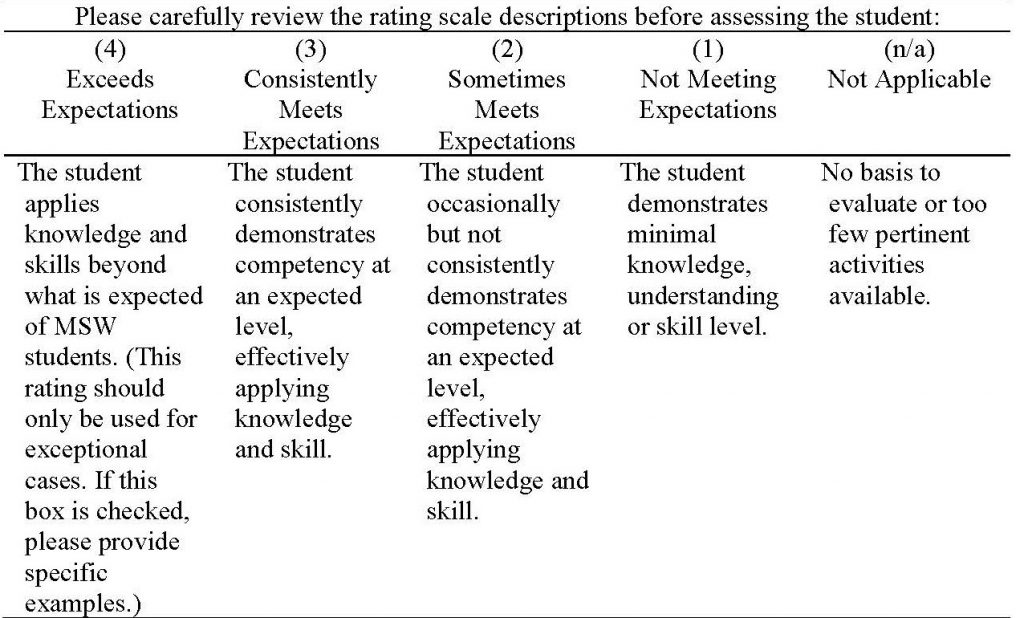

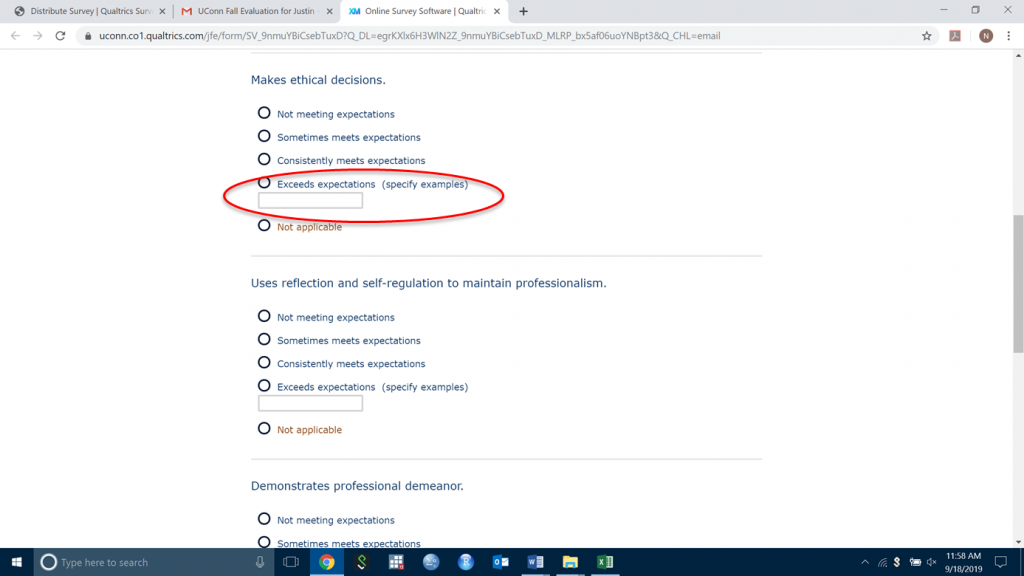

We developed a four-point rating scale to evaluate the competency of student behaviors (see Table 2). The scale includes: “Not meeting expectations,” “Sometimes meets expectations,” “Consistently meets expectations,” and “Exceeds expectations.” There is also a fifth “Not applicable” option. This four-point scale was developed by the FAC after reviewing several rating scales used by peer institutions, referring to scale development literature (e.g., DeVellis, 2017), and engaging in several lengthy discussions. We chose to use a competency-based scale that assesses whether a student is performing the behavior proficiently and how frequently they perform it proficiently. Compared to the old scale, the distinctions between categories are more nuanced and substantively meaningful. This is particularly the case for students who are progressing but not yet proficient; the four-category scale forces a clearer rating. If a field instructor selects the “Exceeds expectations” rating, then Qualtrics requires them to provide a specific example of student behavior that justifies this rating. This feature was designed both to discourage overuse of this rating (i.e., field instructors cannot simply click this rating and move on) and to provide the faculty advisor and Field Education Department with detailed information about exceptional student performance that warrants this score. Because the new rating scale is different from the Likert scales that had been used in the past, additional instructions defining each category were built into the online system. See Figure 3 for a screenshot of the fall evaluation page.
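The required-justification rule can be stated compactly in code. The Python sketch below illustrates the logic only; it is not Qualtrics configuration, and the function name and error messages are hypothetical. The rating labels are taken from the scale described above.

```python
RATINGS = [
    "Not meeting expectations",
    "Sometimes meets expectations",
    "Consistently meets expectations",
    "Exceeds expectations",
]
NOT_APPLICABLE = "Not applicable"


def rating_error(rating, justification=""):
    """Return an error message if the rating cannot be submitted, else None.

    Mirrors the rule described in the text: an "Exceeds expectations" rating
    must be accompanied by a specific example of student behavior.
    """
    if rating != NOT_APPLICABLE and rating not in RATINGS:
        return f"Unknown rating: {rating!r}"
    if rating == "Exceeds expectations" and not justification.strip():
        return "Provide a specific example of student behavior to justify this rating."
    return None


print(rating_error("Exceeds expectations"))
print(rating_error("Consistently meets expectations"))
```

Requiring text only for the top category keeps the form quick to complete for most ratings while adding friction where overuse had been observed under the old scale.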

Table 2

New Rating Scale

Figure 3

Example of Required Justification for an “Exceeds Expectations” Rating

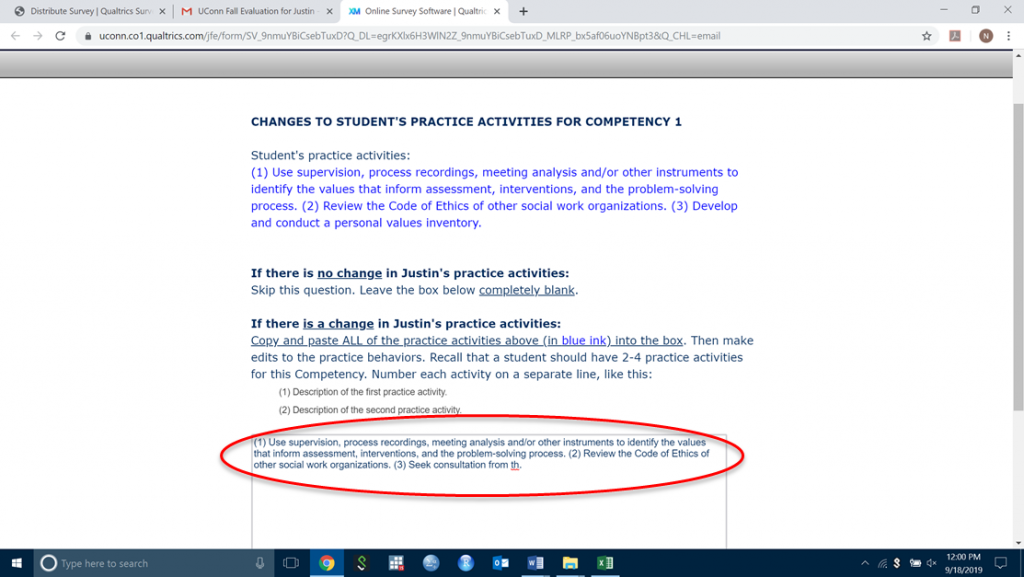

Near the end of the fall evaluation, field instructors have an opportunity to modify the student’s planned activities for each competency. As shown in Figure 4, the original planned activities are displayed in blue ink for the field instructor to review. If planned activities are the same, the field instructor can leave the text box below blank and move to the next competency. If modification is needed, the field instructor can simply copy and paste the original planned activities into the text box and edit as needed. Any changes made to the planned activities will be saved in Qualtrics. After reviewing the planned activities for each competency, field instructors write a short summative comment about the student’s performance in their field placement.

One of the final pages of the fall evaluation lists any behaviors for which the field instructor gave a “Not applicable” response. A short paragraph draws the field instructor’s attention to these behaviors and instructs them to make sure they have an opportunity to observe and evaluate these behaviors prior to the spring evaluation. The purpose of this page is to minimize “Not applicable” responses and ensure that students are observed and evaluated on as many of the CSWE behaviors as possible. Offering the “Not applicable” option in the fall also accommodates situations in which a behavior has not yet been observed and field instructors might otherwise have felt they needed to provide a score. It also recognizes that activities related to some competencies may be scheduled for the second semester of a placement, and would not be expected to occur before the fall evaluation. For example, many of the macro practice educational contracts involve engagement with the state legislature, which is in session only in the spring.

Figure 4

Example of Field Instructor’s Opportunity to Revise Student’s Planned Practice Activities

After the fall evaluation has been completed, Qualtrics automatically sends copies of the evaluation and updated planned activities to the field instructor, faculty advisor, and student. Additionally, a separate email is sent to the student with a link to a short Qualtrics survey that confirms their receipt of the fall evaluation and gives them an opportunity to share reactions or comments with their field instructor and faculty advisor. The students’ confirmation and comments (if applicable) are automatically emailed to the field instructor and faculty advisor. Students are directed not to disclose sensitive comments in this survey if they do not wish both their faculty advisor and field instructor to see them, but instead to reach out to their faculty advisor or field instructor individually to address such concerns.

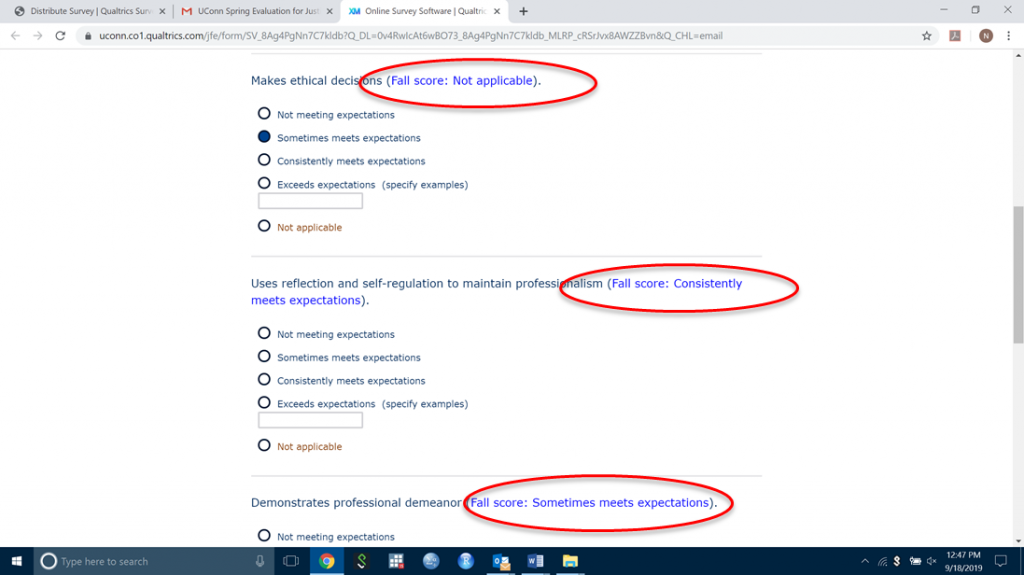

Completing the Spring Evaluation. The final component of the new evaluation system is the spring evaluation, which is completed in April for 20-hour-per-week students and in June for 15-hour-per-week students. Similar to the fall evaluation, field instructors are sent personalized emails with unique links and see the student’s planned activities when assessing the behaviors for each competency. An important feature of the spring evaluation is that Qualtrics is programmed so that the student’s fall score appears on screen when the field instructor is assessing the student (see Figure 5). This gives the field instructor a reference point for each behavior from which to assess the student’s current level of proficiency compared to their level at the end of the fall semester. Similar to the fall evaluation, field instructors must provide a written justification for “Exceeds expectations” scores. Additionally, if they select “Not applicable” for any behavior, a new screen appears with a text box in which they must provide an explanation of why this particular skill could not be evaluated. This provides important information to the faculty advisor and Field Education Department about potential barriers that are present within a placement (or across placements) that hinder assessment of the behavior. The field instructor also answers a question attesting to the total number of hours completed by the student at their field placement that year. At the completion of the spring evaluations, the results are automatically emailed to the field instructor, faculty advisor, and student, and the student is sent a separate link to confirm receipt and provide feedback on their evaluation.
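Two spring-evaluation features described above, displaying the fall score beside each behavior and requiring an explanation for every “Not applicable” response, can be illustrated with a small Python sketch. The behavior labels, function names, and data layout are hypothetical, and the code shows the logic rather than the Qualtrics implementation.

```python
NOT_APPLICABLE = "Not applicable"


def spring_prompts(behaviors, fall_scores):
    """Pair each behavior with the fall rating shown beside it in the spring evaluation."""
    return [(b, fall_scores.get(b, "No fall rating recorded")) for b in behaviors]


def missing_explanations(spring_ratings, explanations):
    """Return behaviors rated "Not applicable" in spring that lack a written explanation."""
    return [
        behavior
        for behavior, rating in spring_ratings.items()
        if rating == NOT_APPLICABLE and not explanations.get(behavior, "").strip()
    ]


# Hypothetical data for one student and one competency.
behaviors = ["Behavior 1a", "Behavior 1b"]
fall = {"Behavior 1a": "Sometimes meets expectations", "Behavior 1b": NOT_APPLICABLE}
spring = {"Behavior 1a": "Consistently meets expectations", "Behavior 1b": NOT_APPLICABLE}

for behavior, fall_rating in spring_prompts(behaviors, fall):
    print(f"{behavior} (fall: {fall_rating})")
print(missing_explanations(spring, {}))
```

Keeping the fall rating next to each spring item gives the evaluator a reference point without requiring them to locate an earlier document.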

Figure 5

Example of Student’s Fall Score Appearing in the Spring Evaluation
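The write-in requirements described above for the spring evaluation can be expressed as a small validation rule. The following Python sketch is illustrative only: the actual system was configured in Qualtrics, and the class and function names here (`BehaviorResponse`, `missing_requirements`) are hypothetical. Only the rating labels and the two write-in rules come from the description above.

```python
from dataclasses import dataclass
from typing import List, Optional

# Rating labels taken from the article; everything else is a hypothetical name.
RATINGS = (
    "Exceeds expectations",
    "Consistently meets expectations",
    "Sometimes meets expectations",
    "Does not meet expectations",
    "Not applicable",
)


@dataclass
class BehaviorResponse:
    rating: str
    fall_rating: Optional[str] = None  # displayed for reference; not validated
    written_text: str = ""             # justification or explanation, if any


def missing_requirements(response: BehaviorResponse) -> List[str]:
    """Return the problems that would block submission of one behavior rating."""
    problems = []
    if response.rating not in RATINGS:
        problems.append("unknown rating")
    has_text = bool(response.written_text.strip())
    if response.rating == "Exceeds expectations" and not has_text:
        problems.append("written justification required")
    if response.rating == "Not applicable" and not has_text:
        problems.append("explanation required")
    return problems


# A top score without a justification is flagged; an explained
# "Not applicable" passes.
print(missing_requirements(BehaviorResponse("Exceeds expectations")))
print(missing_requirements(
    BehaviorResponse("Not applicable", written_text="No group work at this site")
))
```

Keeping the fall rating as a display-only field mirrors the description above: it gives the field instructor a reference point but does not constrain the spring score.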

In academic year 2018-2019, a beta version of the new field evaluation system was launched with all MSW students. One Field Education Department staff member was primarily responsible for administering the system, with technical support from the faculty member who programmed Qualtrics. Nearly all of the features in Qualtrics worked as planned. However, one bug did not appear until several weeks into use and took considerable time to fix. The problem was eventually traced to a default setting in Qualtrics that automatically closes a survey (e.g., the educational contract) if it has been opened but no information has been entered for two weeks. This affected a few dozen field instructors, who had to have links resent to them. Once the issue was identified, the default setting was changed and the problem was solved. An important task for the beta-test year was for the Field Education Department staff to keep track of the details of the various scenarios that required special attention in Qualtrics. These all involved students who changed field instructors and/or placements. However, depending on the specific details of the situation (such as when the transition took place), several different courses of action had to be developed.

Step 5: Evaluating the System Through a Feedback Survey

A brief survey was developed in Qualtrics and emailed to field instructors in spring 2019. The survey was designed to take approximately 10 minutes to complete, and included both closed- and open-ended questions about features of the new field evaluation system. A total of 54 field instructors took the survey, which was a response rate of 25%. Given the low response rate, the findings reported below should be interpreted with caution, as they may not be representative of the entire population of field instructors.

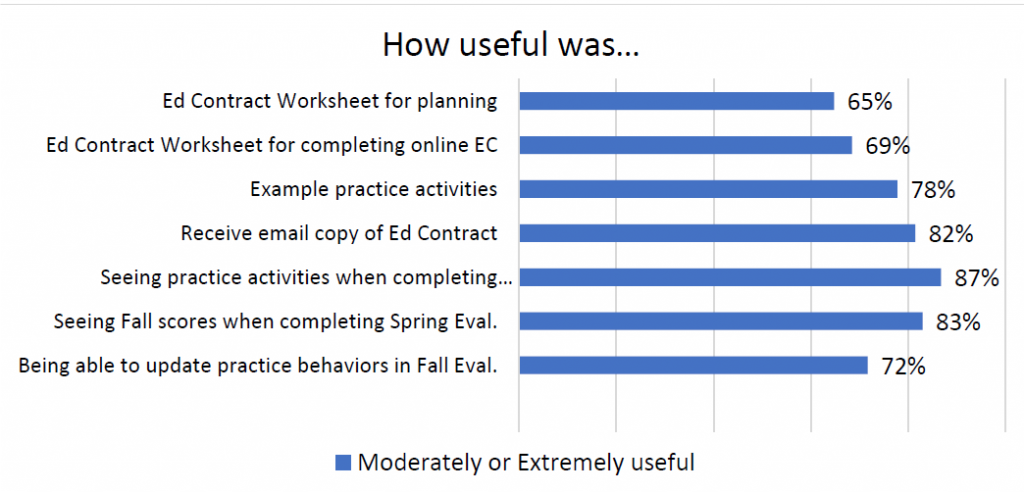

One set of questions asked field instructors to rate how useful different components of the new system were on a five-point scale from 1 = “Extremely useless” to 5 = “Extremely useful.” Figure 6 displays the percentage of respondents who selected the top two responses (4 = “Moderately useful” or 5 = “Extremely useful”). About two-thirds of all respondents viewed each of the components assessed as being useful. The components of the new system that were especially useful (>80%) were (1) automatically receiving an email copy of the educational contract; (2) having practice activities from the educational contract automatically appear in the evaluations; and (3) being able to see a student’s fall scores when completing the spring evaluations.

Figure 6

Results from the Survey of Field Instructors after the Pilot Year (n = 54)

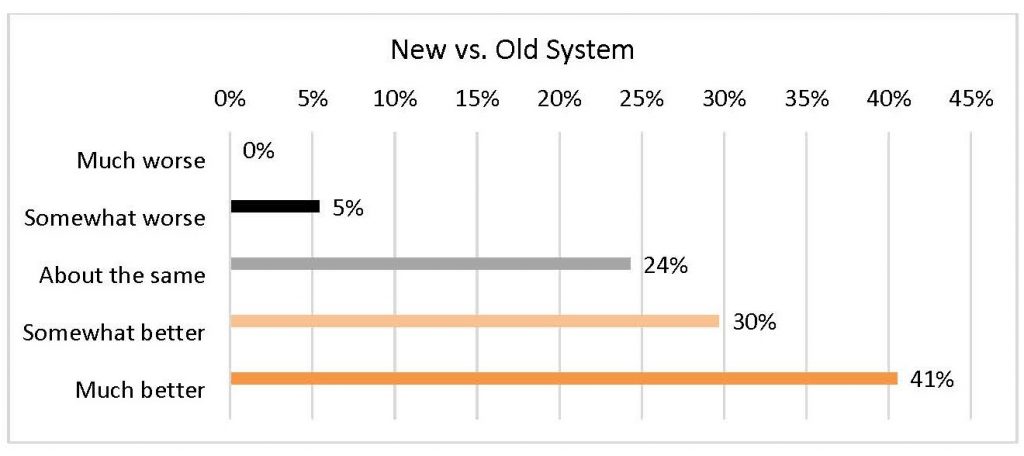

We asked respondents who had served as a field instructor in previous years to compare their experiences with the old evaluation system and the new system. Of the respondents, about 30% (n = 16) were first-time field instructors and were not asked this question. To separate the content and coordination of the system from technical issues that had already been addressed, the question read: “Other than technical glitches, overall, how was using the new system compared to using the previous system?” Results are displayed below in Figure 7. Overall, the majority of respondents felt that the new system was better than the old system (71%), with the most common response being that the new system was “much better” than the old system. About one-quarter of respondents viewed the systems as being about the same, and just 5% viewed the new system less favorably than the old system.

Figure 7

Returning Field Instructors’ Perspectives on the New Field Evaluation System Compared to the Previous System (n = 37)

Three open-ended questions asked respondents to provide specific comments or suggestions about the educational contract, fall and spring evaluations, and the new system overall, respectively. Most of the responses about the new components and system were positive. One field instructor wrote the following about the educational contracts: “It is nice not to have to re-invent the wheel with Ed Contracts. Makes it clear what is expected, is a good starting place to discuss learning needs for the year.” Another field instructor commented on ease of use with the new system: “I found it much more user friendly than previously and having it emailed was great in order to have it available as we moved through the semesters.” A field instructor commented that the new system was more efficient: “The online process was very helpful and was more efficient in completing than the PDFs.” Some field instructors were accustomed to the old system and liked having a printable version of the educational contract and evaluations that they could review with students, and others did not like the format of the evaluations that were sent to them via email.

Discussion

This article described the process of overhauling the field evaluation system in response to issues raised by stakeholders about its efficiency, usability, and validity. The process of identifying limitations of the existing system, proposing recommendations, and building, refining, and implementing the new system occurred over two years. Although the low response rate warrants caution, the majority of field instructors who responded found the new system an improvement over the old system, particularly in the areas of getting rid of PDFs, streamlining the evaluation components, and automatically sending completed documents to the relevant people. We conclude by identifying some important applications of the new system and reflecting on some lessons learned, which are organized as successes and challenges.

Application and Usability

One of the advantages of the new system is that the data are easier to analyze, particularly for subgroups. For example, data from each concentration (e.g., 15-hour generalist-year students) can be downloaded from Qualtrics as a single data file (e.g., Excel or SPSS). In summer 2019, data from the eight student cohorts were saved, and data management syntax was written to clean and combine all datasets into a single dataset. Although the data management work was time intensive the first time, the syntax will be reused in future years (with slight modifications) to quickly clean and analyze the field education data so that they are ready to report to CSWE.
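To illustrate the kind of data management step described above, the following Python sketch stacks per-cohort exports into one dataset. It is a minimal illustration under stated assumptions, not the syntax used at the School: the article does not specify the software or the file layout, so the CSV format, the column names (`student_id`, `c1_b1`), the cohort labels, and the function `combine_cohorts` are all hypothetical, and the data are made up.

```python
import csv
import io
from typing import Dict, List, TextIO


def combine_cohorts(exports: Dict[str, TextIO]) -> List[dict]:
    """Stack per-cohort CSV exports into one list of rows, tagging each row
    with the cohort it came from."""
    combined = []
    for cohort, handle in exports.items():
        for row in csv.DictReader(handle):
            # Basic cleaning: trim stray whitespace from names and values.
            cleaned = {
                key.strip(): (value or "").strip()
                for key, value in row.items()
                if key is not None
            }
            # Skip blank rows that carry no student identifier.
            if not cleaned.get("student_id"):
                continue
            cleaned["cohort"] = cohort
            combined.append(cleaned)
    return combined


# In-memory stand-ins for two exported files (made-up data).
cohort_a = io.StringIO("student_id,c1_b1\nS001,Exceeds expectations\n,\n")
cohort_b = io.StringIO("student_id,c1_b1\nS002, Sometimes meets expectations \n")

rows = combine_cohorts({
    "15-hour generalist": cohort_a,
    "20-hour generalist": cohort_b,
})
for row in rows:
    print(row)
```

Tagging each row with its cohort before stacking is what keeps subgroup analysis possible after the files are merged.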

Reporting to CSWE for reaffirmation and annual reporting can also be done easily with the data from the new system. The reaffirmation documentation requires schools of social work to calculate and report the percentage of students meeting the benchmark for each competency, and explain how the program has calculated that percentage. In the new system, a student is classified as “proficient” in a competency when they are rated as proficient (i.e., “Consistently meets expectations” or “Exceeds expectations”) in a specified number of behaviors that measure the competency [2]. Next, the percentage of students who are proficient on each competency is calculated, as well as the percentage of students who are proficient on all nine competencies. There is no mean score when the rating scale is applied as a categorical measure (i.e., proficient vs. not proficient). One positive result of this choice is that the competency of each cohort of students is more transparent, because the competency of a student with a weaker performance in field is not obscured by students with high or superlative scores.
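The calculation described above can be sketched in a few lines of Python. The proficiency ratings and the minimum number of proficient behaviors per competency follow footnote 2; the function names, data layout, and example students are hypothetical. The sketch also assumes that every behavior has a rating and that any rating other than the two proficient labels (including “Not applicable”) counts as not proficient, which the article does not specify.

```python
from typing import Dict, List

PROFICIENT_RATINGS = {"Consistently meets expectations", "Exceeds expectations"}

# Minimum number of proficient behaviors, keyed by how many behaviors
# measure the competency (thresholds from footnote 2).
MIN_PROFICIENT = {5: 4, 4: 3, 3: 2, 2: 2}


def is_proficient(behavior_ratings: List[str]) -> bool:
    """Classify one student on one competency."""
    required = MIN_PROFICIENT[len(behavior_ratings)]
    met = sum(rating in PROFICIENT_RATINGS for rating in behavior_ratings)
    return met >= required


def percent_proficient(cohort: Dict[str, Dict[str, List[str]]]) -> Dict[str, float]:
    """cohort maps student -> competency -> behavior ratings.
    Returns the percentage proficient per competency and on all competencies."""
    n_students = len(cohort)
    competencies = sorted({c for ratings in cohort.values() for c in ratings})
    result = {}
    for competency in competencies:
        count = sum(is_proficient(s[competency]) for s in cohort.values())
        result[competency] = 100 * count / n_students
    all_count = sum(
        all(is_proficient(ratings) for ratings in s.values())
        for s in cohort.values()
    )
    result["all competencies"] = 100 * all_count / n_students
    return result


# Two made-up students rated on two competencies.
example = {
    "Student A": {
        "C1": ["Exceeds expectations", "Consistently meets expectations",
               "Sometimes meets expectations"],
        "C2": ["Consistently meets expectations", "Consistently meets expectations"],
    },
    "Student B": {
        "C1": ["Consistently meets expectations", "Sometimes meets expectations",
               "Sometimes meets expectations"],
        "C2": ["Consistently meets expectations", "Exceeds expectations"],
    },
}
print(percent_proficient(example))
```

In this example, Student B is proficient in only one of the three behaviors for C1, so half of the cohort is proficient on C1 and on all competencies. A mean score could have masked this student's weaker performance.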

Successes With the New System

Overall, the new system more explicitly and coherently connects the CSWE competencies and behaviors, planned activities, and students’ daily field experiences. Additionally, there is a closer linkage between the educational contract and later evaluations, which helps to elevate the relevance and importance of both to students’ growth in field.

A major objective was to make the new system easier to use than the old system. While some field instructors preferred aspects of the old system, overall, the new system is easier to use and more efficient for all parties. This includes the Field Education Department, which can more easily monitor, change, and keep educational contracts current. Field Education Department staff can also more easily monitor compliance with deadlines and send out reminders that are targeted to field instructors who have not yet completed the educational contract or evaluation.

The new system also helps to ensure that the key individuals responsible for a student’s success in field, namely the student, field instructor, faculty advisor, and Field Education Department, are all working from the same information. This helps to close gaps in information that occurred with the old system, which resulted in people feeling out of the loop and on different pages. The student confirmations that are built into the system ensure that students have received the evaluations, and create an additional feedback loop via their comments to the field instructor and faculty advisor about the evaluations.

Several aspects of the scoring system and process in the new system make the evaluations more informed and consequently a more accurate assessment of student competency. The new rating scale explicitly evaluates students on the extent to which they consistently demonstrate practice skills. As described earlier, the four-point scale can be easily cut into meaningful proficiency groups (proficient = Exceeds expectations/Consistently meets expectations, not proficient = Sometimes meets expectations/Does not meet expectations) for CSWE reporting. Populating the evaluations with planned activities and carrying over fall evaluation scores into the spring evaluation make the evaluations more grounded in practice, focused on growth, and ultimately informed. Safeguards were also built in to counteract grade inflation and the use of the “Not applicable” response. Although results are preliminary, early evidence suggests that requiring field instructors to write in justification for an “Exceeds expectations” score may have reduced how often it is used. In prior years, the highest score (5 = Outstanding) was the modal rating for many behaviors, but now it is used for fewer than one in five students in most behaviors, and fewer than one in ten students for many behaviors. This is a more appropriate distribution for what should be a rare score. The “Not applicable” write-in response also allows the Field Education Department to identify trends in behaviors that are consistently difficult to demonstrate and particular field placements or types of placements where barriers may exist to meaningfully assessing students in certain behaviors.

Challenges With the New System

Many of the challenges with the new system stemmed from the heavy lift of setting up the system, working out the bugs, and acclimating users to the new system. Building a system that had many of the features recommended by the FAC required Qualtrics expertise. Another challenge was that not all of the problems were caught in pilot testing, and this required substantial time from the Field Department staff to troubleshoot. Processes and procedures also had to be developed for dealing with special situations, such as changes in field placements and personnel, and evaluation of first-year macro students in a simulated micro practice skills lab. Manuals had to be drafted on how to use the new system, and staff had to learn to use Qualtrics. This also included developing a new way to save files efficiently and monitor and track various aspects of the new system. Although staff were open to changes and became adept in the process after the beta year, learning and following the new processes took time and periodically was frustrating for staff.

Two specific lessons learned pertained to the educational contract and the worksheet. First, the worksheet stated that the sample practice activities could be used verbatim in students’ educational contracts. This resulted in heavy reliance on the sample activities in a noticeable portion of the educational contracts. In the subsequent year, we changed the language in the worksheet to state explicitly that the sample activities were for reference only and should not be used verbatim. This was done to promote the development of individualized practice activities specific to each student’s field work. The rate of verbatim statements declined drastically.

Second, we learned through trial and error about firewalls that existed in certain agencies or organizations. The firewalls prevented the emails with links to the educational contracts and the evaluations from getting to the field instructors, despite the fact that steps were taken to reduce this risk (e.g., the email sender was the Field Education Department rather than a generic Qualtrics email address). We had to develop a workaround for these cases. Specifically, the Field Education Department sent out a separate email asking field instructors to reply if they had not received an email with a link. The Office then sent the links individually to each field instructor or used an alternative email address that did not have a firewall issue.

Conclusion

Field education is a central component of social work education, and a coordinated, well-planned field evaluation system facilitates student learning and makes for a smooth and easy process for faculty advisors, field instructors, field departments, and students. The field evaluation system described here has improved and streamlined the assessment of field education at the School. The new system has also proved to be effective and efficient during the massive shift to virtual settings required by the COVID-19 pandemic. What began as an effort to improve the coordination between the key parties developed into a comprehensive, multi-component system that strengthened several aspects of field education. The new system allows field instructors and students to better plan, monitor, and evaluate students’ field experience, and the Field Department to track the forms and results more consistently. Having information accessible electronically and across documents (e.g., the educational contract information populated automatically into the evaluations) reduces frustrations for field instructors and faculty advisors. The data available on the back end allow for a more thorough and nuanced analysis of individuals, subpopulations, and field education as a whole. This article describes the process and end product in detail, to provide ideas for, or even to guide, other social work programs that want to modify or improve aspects of the system their field department uses in a cost-effective way that also builds internal capacity.

References

Council on Social Work Education. (2015). 2015 educational policy and accreditation standards. https://www.cswe.org/Accreditation/Standards-and-Policies/2015-EPAS

DeVellis, R. F. (2017). Scale development: Theory and applications (4th ed.). Sage.

Hitchcock, L. I., Sage, M., Smyth, N. J., Lewis, L., & Schneweis, C. (2019). Field education online: High-touch pedagogy. In L. Hitchcock, M. Sage, & N. J. Smyth (Eds.), Teaching social work with digital technology (pp. 259–307). Council on Social Work Education.

Kettner, P. M., Moroney, R. M., & Martin, L. L. (2015). Designing and managing programs: An effectiveness-based approach. Sage Publications.

Samuels, K., Hitchcock, L. I., & Sage, M. (2020). Selection of field education management software in social work. Field Educator, 10(1), 1–23. https://fieldeducator.simmons.edu/article/selection-of-field-education-management-software-in-social-work/

Wayne, J., Bogo, M., & Raskin, M. (2010). Field education as the signature pedagogy of social work education. Journal of Social Work Education, 46(3), 327–339. https://doi.org/10.5175/JSWE.2010.200900043

[1] Note: The timeline pertains to students completing 20 hours per week in field. For students completing 15 hours per week, the Fall Evaluation occurs in January and the Spring Evaluation occurs in June.

[2] Each CSWE competency is evaluated by two or more behaviors. A student is proficient on a competency if they are rated as being proficient (i.e., “Consistently meets expectations” or “Exceeds expectations”) on a minimum number of behaviors within that competency. If the competency is assessed by five behaviors, the student must be proficient in four or more behaviors. If the competency is assessed by four behaviors, the student must be proficient in three or more behaviors. If the competency is assessed by three behaviors, the student must be proficient in two or more behaviors. If the competency is assessed by two behaviors, the student must be proficient in both behaviors.